Steve Loyka: [00:00:00] Good afternoon everyone. My name is Steve Loyka. It’s my privilege to welcome you to our masterclass. This is my colleague Jen Rodstrom. And together we’re excited to pick up the conversation that was actually begun on the main stage this morning. And I wanna get right into it ’cause we have a limited amount of time.

And so I wanna give you a quick rundown of what we’re gonna cover and what you can expect in today’s session. So we’re gonna start out by reinforcing why businesses really need to get serious about thinking beyond surveys. We’ll talk about the places along the customer journey, where your customers might be telling you more than you think about their needs, expectations, motivations, where they’re struggling, and how you tap into those signals.

We’ll talk about what signals you may want to start prioritizing based on your organization or your program’s goals, and then we’ll talk about how you engage and enable the broader enterprise in your business to ensure that you’re contributing to and realizing tangible business outcomes. I like to start off these presentations with questions.

So we’re gonna do this by a show of hands, but how many of you feel confident that your customer surveys are telling you [00:01:00] everything you need to improve upon the customer experience and deliver on your customer’s expectations?

Jen Rodstrom: No one wants to raise their hand. That’s after they heard that this morning.

Steve Loyka: That’s exactly, that’s why you’re here, right? Absolutely. So tell me, show of hands, how many think your surveys are telling you only part of the story? Obviously, right? How many of you have ever looked at your survey data and thought, this doesn’t align to what I’m seeing in actual customer behavior?

Absolutely. So I think it’s obvious that organizations like yours and others are feeling the limitations of a survey-only or survey-heavy approach to customer feedback collection. And the reality is you’re not alone, right? In addition to all your peers in this room, your customers are feeling the limitations too, right?

They’re tired of being asked for feedback that they don’t think is actually gonna contribute to any real tangible change for them and their future experiences, right? They’re skipping surveys, or they’re giving half-hearted, rushed answers to surveys. They’re skipping them, and they’re saying one thing and doing another.

That’s that disconnect [00:02:00] between stated intention and the actual customer behavior. So where does this leave us? It leaves us with a fragmented view of our customers. Over the last two decades, response rates to customer surveys have plummeted from around 30% to below 10% on average today. Too often these surveys represent a negative touch point in the broader customer journey because they feel disconnected from the actual experience that your customers are having.

And they have that perception that’s been informed by previous experiences that nothing’s gonna change as a result of the feedback that I give. So why bother responding at all? And so I wanna give you an illustrative example here. Picture this: a few weeks ago, you used your reward points to upgrade yourself to business class for the trip here, right?

And you travel here to Las Vegas and the experience in business class is broadly stellar, right? But when you arrive here in Las Vegas, you realize that the airline has lost your baggage or they’ve redirected your baggage, right? And so you show up here today in your travel clothes, and you [00:03:00] receive an email with a question, how likely are you to recommend this airline to a friend?

We’ve all gotten that. So be honest. Who’s responding to that survey? Very few of us, right? One. And is it because you’re like me and you do this for a living and you wanna see how poorly designed or how well it’s designed, right? Yes. But the majority are not gonna answer that. If they do, it’s not gonna tell the full story of that booking-to-arrival travel experience.

And so it leads me to my next point. The feedback we collect via surveys largely results in inconsistent data. The majority of respondents to surveys, as we all know, represent the sort of emotional extremes of our customers, right? They’re either the most satisfied or the most dissatisfied. And you couple that with poor survey design, poor sampling strategies, and the data you get back is hardly actionable.

And hopefully you had a chance (I dunno if anybody, show of hands, had a chance) to attend our colleagues’ masterclass this morning around designing impactful surveys. If not, you’ll catch it on replay in the coming days. We’ll have those recorded and sent out. But I plug this [00:04:00] session because good survey design, coupled with alignment to broader customer communication strategies and integration with operational data that can help contextualize the feedback that you are getting, can alleviate some of these limitations.

But the last limitation that I wanna highlight before we get further into this is the lack of predictive value, right? Surveys reflect past experiences that have occurred in multiple channels across dozens of touchpoints, and they offer limited lagging insight. Even when customers respond thoughtfully, you often don’t get the entire story of their experience with your brand, or they’re telling you something and then they’re going on to do something else, right?

And so that gap between stated intention and actual behavior is influenced by a number of different factors that happen across those subsequent touch points. And so what’s the alternative? As you heard this morning, the future of customer listening is not about asking your customers about the experiences they’ve already had with you, right?

It’s about going to where the [00:05:00] experience is lived and the conversations are happening, and listening to the signals they’re already giving you and meeting them where they are with an experience that feels like you understand where they’re trying to go and what they’re trying to accomplish. Last question to the audience.

How many of you have been part of a large CX initiative that was driven primarily by survey feedback? Anyone? And how many of you ended up with outcomes or results that didn’t quite match what you had expected? So I wanna introduce you to Generic Insurance. This is a company that’s been struggling to grow. Costs have outpaced revenues, driven largely by an inability to retain customers, and their relationship surveys are telling them that they’ve got an NPS roughly around 25, largely below the industry average.

Now, when the executive team meets to discuss their annual goals, they identify a few familiar favorites, right? We can all relate to this. They wanna reduce costs, they wanna drive revenue, and they wanna improve loyalty.

So they go out [00:06:00] and they engage their channel leads, their line of business leads, their insights teams, and they say, what can we use to determine how we’re gonna solve for some of these and how we’re going to meet these corporate goals for the year? And what they get back from their insights team and their channel leads is that the highest cost to serve is in the contact center, however, it’s also where they see some of the highest scores for agent satisfaction. And then as I mentioned earlier, they have their relationship surveys that are telling them that likelihood to recommend is quite low, right? And so ultimately the leadership team decides that the best way to bring down cost and improve the customer experience is by developing a new claims experience.

They develop an entirely new digital ecosystem, a new app, and they launch this with big fanfare. Now, six months later, costs, revenue, loyalty all remain the same. So what are they missing? To understand that, let’s meet Joe. So Joe just got into a fender bender on his way home from work, and in the midst of his frustration with himself, he [00:07:00] remembers that splashy communications campaign announcing the new claims experience and the launch of the app.

At the time, he had downloaded the app just in case, and so he pulls out his phone and immediately begins to have issues with the app. The fields are too small. He’s zooming in to read the instructions, and ultimately the workflow just isn’t working the way it’s supposed to, right? So he decides to wait till he gets home to complete the submission.

When he gets home, he logs into the insurance portal and he realizes that none of the steps that he had actually completed were saved, right? So he is starting again. And so, frustrated, he logs on and he tries to submit all the claims information. He has questions about some of the fields and the information that is being asked.

Things like location, which he interprets to mean where his vehicle’s registered and where he’s located. And then ultimately at the end, he goes to submit pictures of the accident, as is required, but ends up hitting a wall again and again. And so he’s navigating back and forth between an FAQ page and steps in the form trying to resubmit those photos, and ultimately it just doesn’t work.

So he contacts the chat [00:08:00] bot, right? He engages the chat bot, and unfortunately all it keeps doing is feeding him, again and again, the FAQ page that he was already using. So he types in “speak with an agent.” He’s given a phone number that he has to then call himself, and he’s ultimately connected with a contact center agent.

That agent, though, is extremely empathetic, patient, extremely helpful, right? She says, oh, that location, that’s actually where the accident happened. She gives him a PDF that he can fill out to further the claims process. She gives him an email address where he can submit this claim and the photos, and ultimately it works for him, right?

And so he goes back to his computer, he does this, and ultimately he’s able to complete the submission. This workaround for this new digital experience, new claims experience has worked, but ultimately it’s bypassed this brand new splashy experience that they’ve developed. Two days later, Joe gets an email with a request for feedback.

It says, how would you rate [00:09:00] on a scale of one to 10 your claims experience? Joe thinks to himself, I haven’t gotten a notification that my claim was processed, that it was approved, so it’s not over yet. My experience isn’t done. So he ignores the survey. Now Generic is largely blind to this entire experience that Joe has had, right?

Traversing multiple channels and trying to submit multiple ways to get this claim done. Frustrated, though, he goes out and he tweets about the experience and tags Generic, right? So at least they’re alerted to a point of frustration. Let’s consider an alternative, though. So instead of asking him about the claims experience, the request for feedback is about the agent experience, which was a positive touchpoint in his journey.

As such, he rates it a 10, right? But he’s still frustrated at the broader experience, in fact writing in the comment section, “Your new claims experience is awful,” right? And then he goes out and he tweets about it again. So what did Generic miss? They missed a lot in this journey, right? A lot of opportunity in which they could have helped [00:10:00] intervene and further Joe’s claims experience.

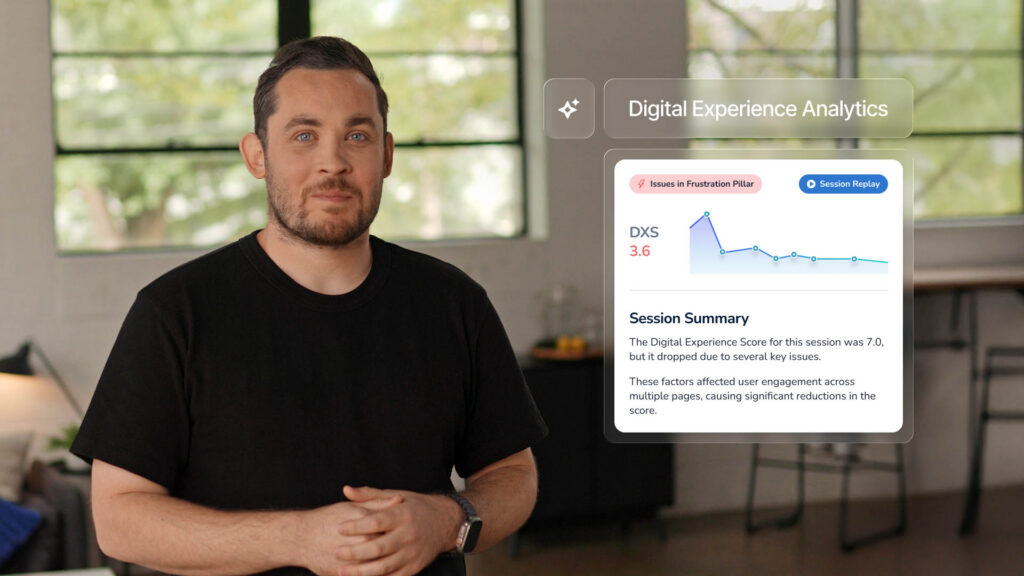

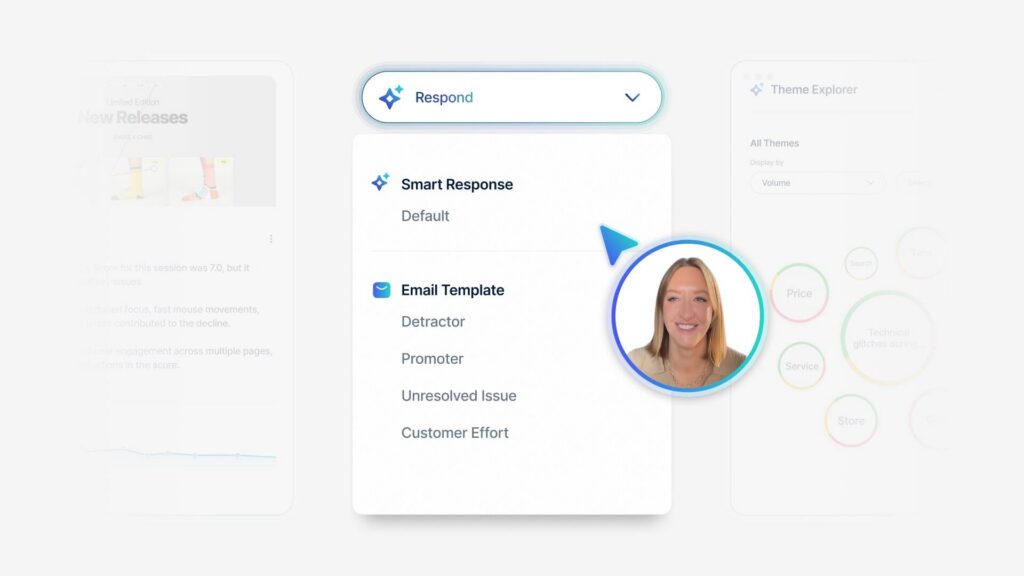

Had they been listening where the experience was unfolding, starting in the digital channel, they would’ve been able to identify where he was struggling in the app, perhaps intervening proactively and helping him complete that claims process. They could have gone back and watched the session replay, or as you heard this morning, used the smart summary to identify what’s driving this broad trend of self-service abandonment that we’re seeing.

He transitions into the conversational channel. And in that channel, had they been listening there, they would’ve understood that he was struggling with uploading photos and understanding what information was required, and an analysis of those chat transcripts could have helped them train the chat bot more effectively, update FAQ pages, and overall improve the experience for future customers who are going through the same challenges.

An analysis of the call to the contact center would’ve also identified all of these other challenges that he encountered on his way to submitting that claim [00:11:00] and given context to what the contact center agent ultimately just flagged as claim support, right? And in addition to knowing that this was a claim support service request, they would’ve been able to identify all of these other precursor issues and gone upstream and tried to address those for future customers. Now, social listening’s largely a reactive channel, but by understanding what problems are surfacing, you can proactively identify solutions for future issues, thereby influencing future customer behavior and influencing overall brand reputation.

So there’s a lot of opportunity to understand what’s happening within this journey that you’re currently not seeing or hearing. And so I know this feels like a lot of new channels, new touch points to potentially be listening at, right? And investments that you have to now go make a case for, but there’s real opportunity here to contribute to tangible business value, like cost reduction and improvement in loyalty and retention.

And so to walk you through this and [00:12:00] understanding where to start, I’m gonna turn it to Jen, who’s gonna talk about how you identify what your business goals are and how those influence what signals you prioritize.

Jen Rodstrom: Terrific. Thank you Steve. Good afternoon everyone. Really excited to be here with all of you.

And I think what Steve just shared with that great Generic Insurance example has both the why for expanding sources (we saw how much information we were missing) and where you can find these potential sources of new insight. What I like about this example is that you were hearing both the business and the customer perspective, right?

And where I wanna dig a little deeper is on that business perspective, because of course, that’s what all of you are most interested in. So during this portion of the masterclass, I really wanna talk through with you, how do you get started? Again, we just laid out a lot. Nobody can start there on day one.

We don’t expect that. And your businesses would probably laugh. I wanna talk about what types of signals you should be collecting. Again, there’s a lot of different things you can be collecting. Even [00:13:00] just adding one new piece of data is gonna have a significant impact. And finally, we need to do this with what’s the value to our organization in mind?

Tying back to those business outcomes, if we wanna be getting the investment we need to make this a reality. So where do you begin? So let’s start with defining the outcomes. We saw this with Generic. They listed some of the things that they needed, and I’m sure on this list here, many of them jump out at you: oh yeah, we’re looking into that one. Absolutely. We’re hearing that from the CEO on a regular basis.

So we need to be able to answer, why are we undertaking this effort? What do we hope to achieve? And these outcomes aren’t defined in a vacuum. As you all know, the CX team, they’re business enablers, right? You guys don’t own a lot of the processes and areas. You’re not contact center leaders necessarily. You’re not the digital owner. So you need to connect with those folks. You help them to connect the dots and drive that value. And you’re unique because you have that [00:14:00] cross-functional view and you’ve built those coalitions across the organization, which is what makes the CX team and that unit and that whole effort so important.

And we need to engage the stakeholders who are ultimately accountable to whatever that goal is. So let’s say we’re talking about improving the service experience. We wanna talk to contact center leaders. They’re the ones who own that and are gonna ultimately be responsible for metrics around reducing call volume.

Now, as far as how we identify which new signals, that depends in part on what that outcome was. And the reality is we need to go where our customers are. And quite honestly, you can listen everywhere. I don’t recommend you do that to start, but there are a lot of different places where our customers are talking to us.

So if you’re focused on that service quality improvement, you may wanna start with some of those contact center things. It may be chats. Let’s just bring in chat transcripts. We’ll start there. Perfect. It may be calls, and you start doing speech analytics, and it might be agent notes, because they can be incredibly [00:15:00] valuable if the agents have been coached on the best way to take notes and really understand the root cause of why the customer called in.

Together with the relevant stakeholders, you can lay out the how and why, ’cause ultimately, you will likely need to build a business case to get something like this funded. Ultimately, we’re doing this to develop insights from all of that listening.

Now, I recognize there is a step between two and three where, you know, you have to do that minor thing of finding the data across your organization and accessing it. And I joke because I know this can be like, where the heck does it live? It’s in some data warehouse somewhere in a table nobody’s ever accessed.

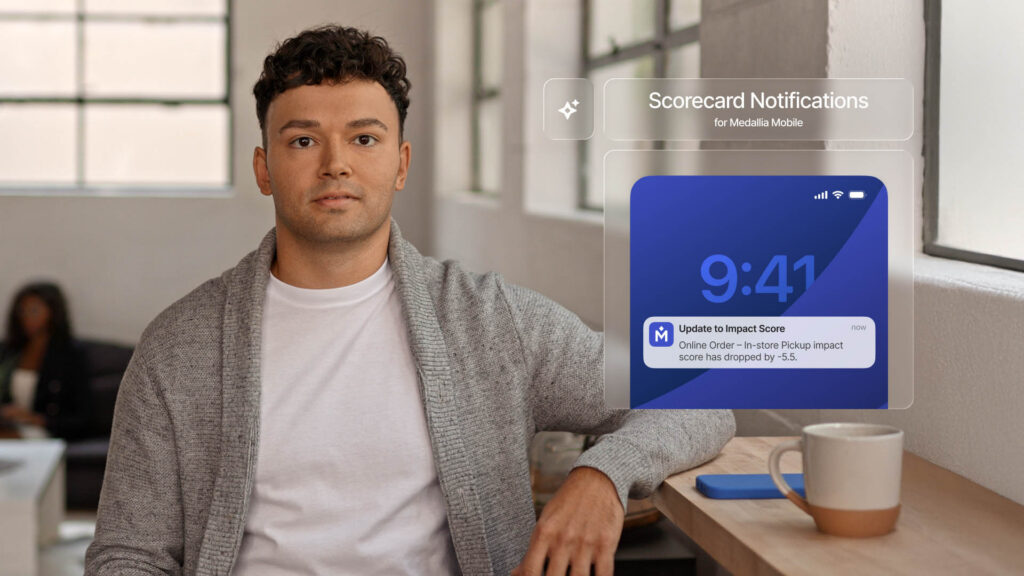

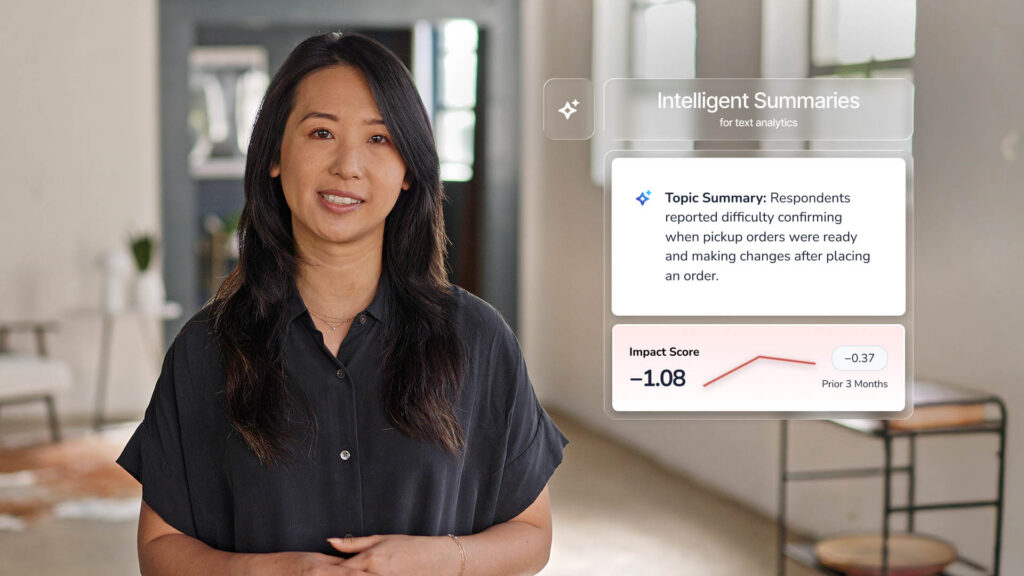

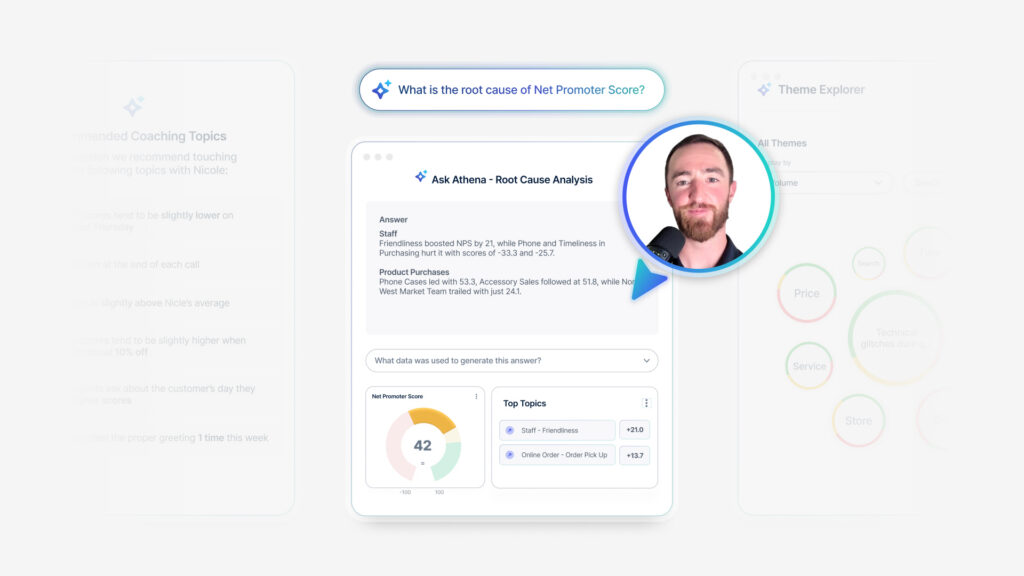

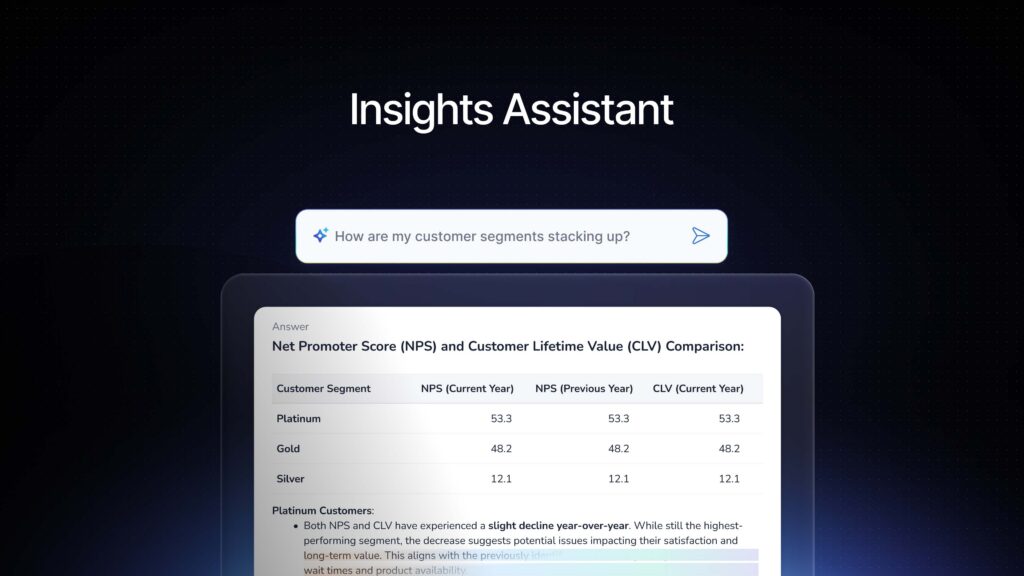

So there’s a bit of an effort that has to happen, but I don’t believe I have IT folks in the room today. So we’re not gonna spend too much time there. But you’re going to find it, you’re going to determine what it is and where it is, and it’s going to be brought into Medallia, and that’s where the fun, if you will, gets to happen, because our AI and advanced analytics will help you learn what it is our customers are saying to us kind of [00:16:00] every day.

For example, with service quality improvements, if you’re doing speech analytics on those calls, you are quite literally hearing the voice of the customer and of the agents who are directly telling you what needs improvement, what’s working and what’s not. By having these insights, both your survey and your unsolicited insights together in one place, you’re ready for the most important step, because we’re not just collecting insights because they’re fascinating.

We need to empower action. And I know we talked about this morning. Fabrice in particular brought this point up and how and where you’re going to drive action is very much based on those outcomes defined in that first step. And also what you learned. And I wanna illustrate some of this for all of you with a few examples and then some real world cases of some of our clients.

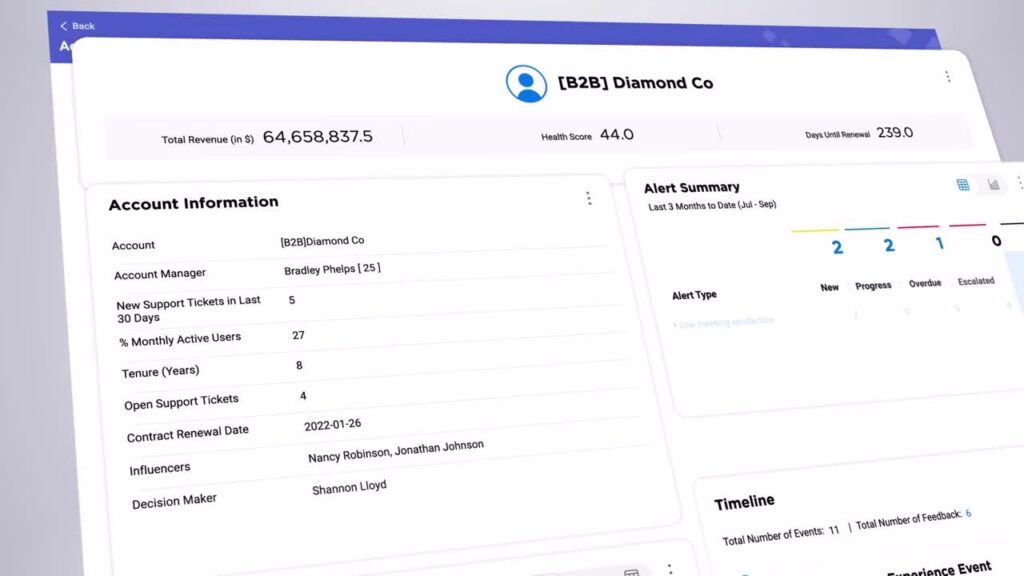

Of how they’re doing this work. Again, to bring it to a level where it’s, I want you and Steve and I both want you to feel like this is doable, and when you get back to the office after this is something you can really think about doing. [00:17:00] So let’s get started. I am gonna be sharing some screenshots of dashboards, and I know dashboards aren’t cool anymore.

I think we heard that this morning, but for now, dashboards exist. So let’s take a look. They look similar probably to things you have seen, but all that’s really different is we’re bringing in some new data. So we look at a retail example, where the goal or outcome might be something like increasing revenue, particularly in our lagging regions.

Any of you with in-person, multi-unit types of businesses are familiar with this. And with our traditional voice of the customer surveys, we’re doing those post-transaction surveys or the post-visit survey, which are valuable, but our more forward-thinking clients are also bringing in social media listening and review sites.

And this augments that direct feedback and creates a more holistic view of what’s happening. And what I really love about social listening is that this is not just for those of you who are in retail or hospitality or restaurant, [00:18:00] those folks, yes, you may have perhaps more to choose from if it’s Yelp reviews and TripAdvisor and Google reviews and folks talking on Facebook and posting their vacation photos on Instagram.

But if you’re in a B2B environment, there’s LinkedIn, there’s X, the brand formerly known as Twitter. And there’s probably industry forums that you could pull from. In either case, you’re capturing signals from folks who probably wouldn’t take your survey otherwise, and you’d never hear from them. Now, I’d love to hear if anyone here in the room, I’m gonna put you all on the spot.

We’re getting a little tired. Any of you doing social listening right now? Do you mind sharing? A nice woman in what looks like a cream-colored top over there, and Jade is gonna come over with a microphone. I would just love to hear about your experience.

Q+A: So right now we’re using platforms like Sprout and Cento and essentially they have their own dashboards.

You can filter by time. How we do it is we do daily mention reports. Every [00:19:00] single day we’re going on there. We’re looking at what people are saying across X, Instagram, LinkedIn, Facebook. And then there’s some, ’cause I’m from Henry Schein, it’s a dental company, so we’ve got Dentaltown and other forums like that.

So we just listen to that. And then anything major, we just report back to leadership.

Jen Rodstrom: And now are you bringing that in with your survey feedback or is that sort of a future goal?

Q+A: Yeah. So it’s not a goal right now at all. Very new to this, but would love to hear more about how that might be possible.

I didn’t even know that we could integrate that within Medallia.

Jen Rodstrom: Yes, we have folks who are using that, or Sprinklr, I believe, is another common one. And folks are bringing it in that way. Thank you very much. Appreciate that example. I think a lot of you are doing social listening in one place and your surveys in another, with that goal of being able to bring them together.

That was perfect. Thank you so much. I’m gonna move on to an example from digital. Now let’s look at a company, and again, these probably all sound very familiar. They want to improve operational efficiency by reducing digital friction. Everyone loves that term, digital friction. Most programs today use [00:20:00] digital intercept surveys that kind of pop up after X number of pages or after you’ve done something, and that’s absolutely valuable.

But future-proofing companies recognize surveys are not enough, and they are deploying digital experience analytics (you guys all heard some of this this morning) across their web, app, and mobile sites.

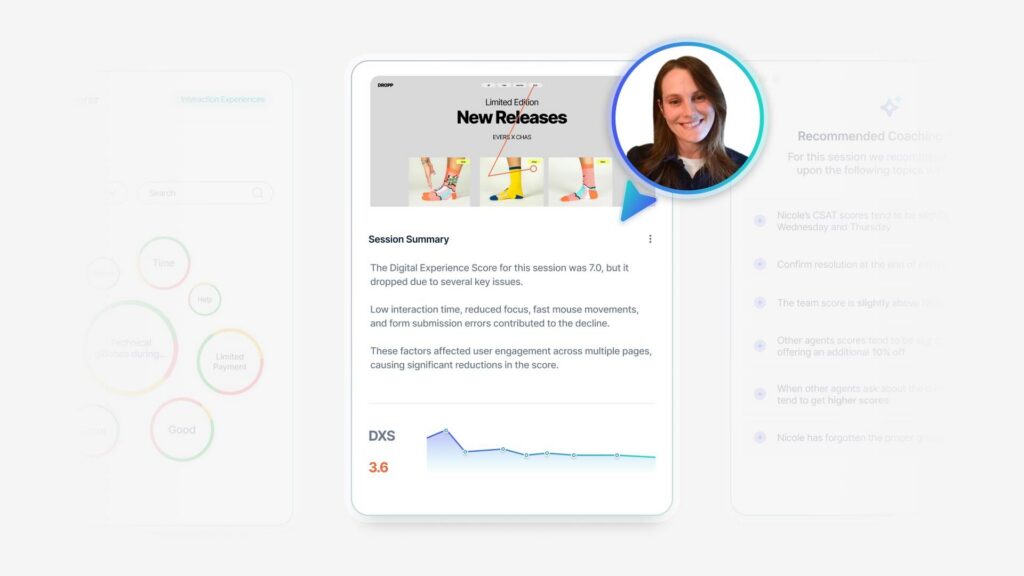

Which is critical because I think regardless of industry you are in, your customers are using digital more and more. Digital listening is also unique in that it captures what customers actually do, not what they said they did, not what they plan to do, but it’s what they’re actually doing. And it’s automated.

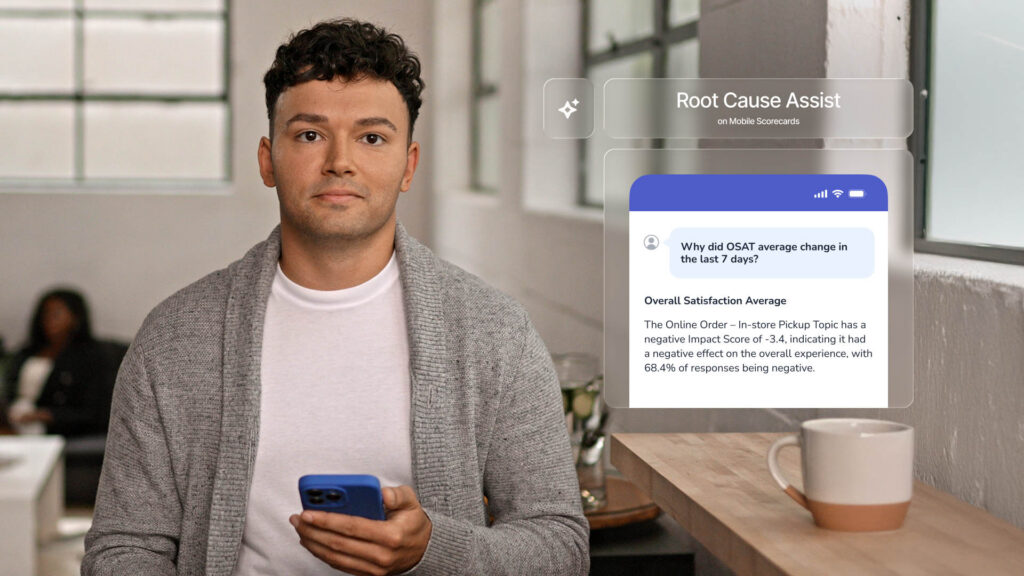

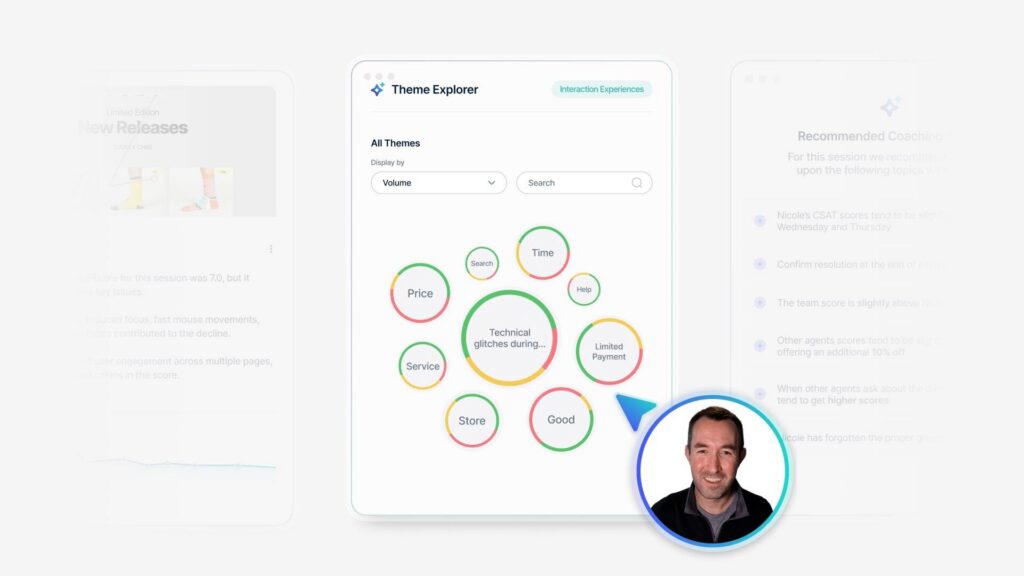

So it’s not like you’re in there. You can do session replay, we saw that this morning, but you can also just have aggregated, across a whole slew of these, what happened. And if you aren’t familiar with Medallia Digital Experience Analytics, for which we have a lovely booth that I highly recommend you visit, it automatically scores experiences at the session and page level, and it indicates where users [00:21:00] are frustrated, where there might be technical issues and so forth.

So this particular screenshot shows you some of that. There’s a lot that you can learn about DXA. I’m not an expert, but it has some very cool features, because these automated scores are driven by behavior. Again, it’s not what they say they’re going to do, it’s what they’re actually doing. So for example, if we look at the one that’s going up, that’s not something we want going up.

That’s frustration. And some of the good things like engagement are going down. And when we look at frustration, it captures behaviors that indicate a negative user experience. So it might be unresponsive clicks: if I just click this button five more times, it’s bound to work. Or you’re doing one of these with your click, or if you’ve got your mobile phone, you’re like, maybe if I turn it this way.

So things that you don’t even realize you’re doing, we capture that and we code that in a way, so we know that’s causing frustration. So again, this doesn’t just tap into the silent majority that doesn’t take our surveys, it gives you insights that no survey can possibly [00:22:00] capture, because I’m not gonna think to tell you, I clicked five times on the button and it didn’t work.
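The kind of "unresponsive click" signal described here can be illustrated with a minimal sketch. This is not how Medallia DXA scores frustration; the `Click` record, the `find_rage_clicks` helper, and the thresholds (five clicks within three seconds) are hypothetical choices made only to show the idea of flagging repeated clicks on the same element.

```python
from dataclasses import dataclass


@dataclass
class Click:
    timestamp: float  # seconds since the session started (assumed unit)
    element_id: str   # hypothetical identifier of the clicked element


def find_rage_clicks(clicks, min_clicks=5, window_seconds=3.0):
    """Return the element ids that got at least `min_clicks` clicks
    inside any sliding window of `window_seconds`."""
    flagged = set()
    recent_by_element = {}
    for click in sorted(clicks, key=lambda c: c.timestamp):
        recent = recent_by_element.setdefault(click.element_id, [])
        recent.append(click.timestamp)
        # Drop clicks that have fallen out of the sliding window.
        while click.timestamp - recent[0] > window_seconds:
            recent.pop(0)
        if len(recent) >= min_clicks:
            flagged.add(click.element_id)
    return flagged
```

A session with five fast clicks on one button would be flagged for that button, while the same five clicks spread two seconds apart would not. A real product would likely combine several such behaviors into a score rather than a yes/no flag.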

And even if I did say that, you’re not gonna know what page I was talking about. DXA can also reveal the negative impact of digital friction on the business when we get into that ROI discussion. So this report is showing you potential revenue loss due to poor experiences on specific pages. So for any of you who are familiar with our reports: red, bad, right?

And more red, more bad. So in this case, we can see that this is actually an “open a checking account” page. Now, if I’m a bank, and I know I have at least one bank customer in here, that’s not a happy thing. We want them to be able to do that. And the other thing that starts to happen is that this has an impact on your contact center.

I wanted to get this done digitally, right? I went online. I don’t wanna have to actually talk to a human being, but in this case, we see that only a third of the folks could accomplish what they set out to do on that [00:23:00] online site. So where do they go? The only recourse they have: they might try to self-serve with the chat bot, but ultimately they’re gonna land in your contact center.

And that is expensive. So we start to see how digital can have a negative impact on our contact center. So let’s look at a case study from a Medallia client. And this one is pretty interesting in that it’s a sort of unique organization. It’s gaming. So this is a company that provides platforms for your government sponsored lotteries and sports betting.

They were having a problem many of us have: low customer engagement. The scores weren’t where they wanted to be, and they deployed digital analytics across their state lottery clients to hear what was happening with the steps along the journey. So one pain point they uncovered was after a very common thing: they decided to update their app.

But what happened was they got this 10 times spike in errors with the app, and they found that within two hours. And so what they could do is within 24 [00:24:00] hours they could fix it and re-release it. And as I’m sure you can see, that’s a pretty incredible outcome for anything happening on digital.

And another pain point they had was that 22% of their gaming customers wanted to register their accounts online and couldn’t complete registration. By fixing this one experience, this one problem, the company saved $8 million a year. So digital can have a profound impact pretty quickly. Let’s look at conversational.

Most companies have a contact center of some sort, and the common goal we hear both from our contact center leaders and our C-suite is, we need to reduce call volume. I’m sure you’ve all heard that a million times, but we also don’t want to inadvertently mess up the agent quality scores. We have to really have a careful balance there.

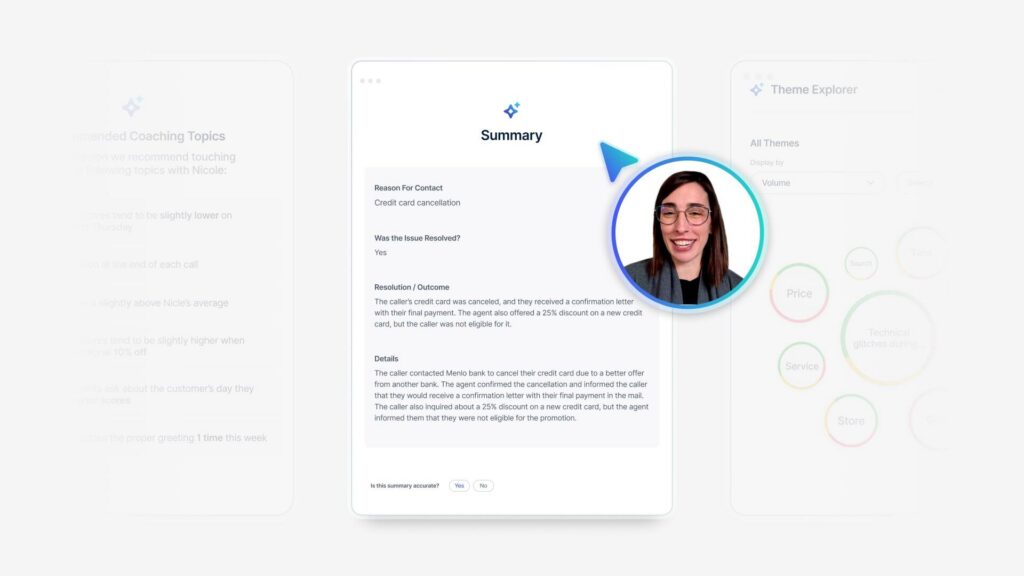

So the longstanding practice is to send that post-service survey after whatever that interaction was that happened in the contact center, where our forward-looking companies are doing that, yes, but they’re [00:25:00] bringing in agent notes. They’re bringing in the calls in the form of speech analytics, which captures a hundred percent of calls.

And they also are probably bringing in chat. They may be bringing in emails, and they’re able to uncover the root cause of customer pain and reasons for unresolved support issues through speech and text analytics. And rather than just capturing how the customer feels about that agent (yes, we wanna know that), you’re getting at that broader experience.

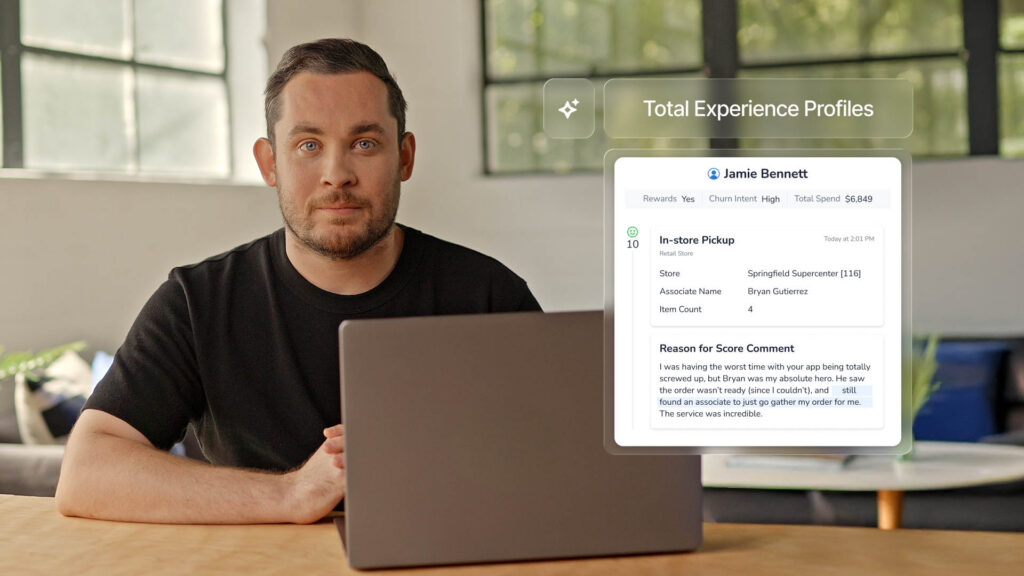

And what I wanna show you here is there’s four of those sort of dashboardy things on the top. Two of them look very familiar to you, probably: Net Promoter Score, First Call Resolution. Things we’re very familiar with. Two of them are automated, thanks to bringing in those additional insights. One is called the customer experience score, and that automatically measures how well an interaction went across everything that’s been ingested from the contact center.

The CX risk flag is something you would set. It’s a custom metric. And it’s when an interaction or a survey [00:26:00] response hits some flag that indicates a predetermined risk. And it may be something like churn, or spend is about to go down.
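To make the idea of a custom risk flag concrete, here is a minimal sketch of a rule that marks an interaction or survey response when it matches a predetermined risk. It is only an illustration under stated assumptions: the `RISK_PHRASES` table, the `cx_risk_flags` function, and the low-score threshold are hypothetical and do not describe how the CX risk flag is actually configured in Medallia.

```python
# Hypothetical phrases that a program owner might associate with each risk.
RISK_PHRASES = {
    "churn": ("cancel my policy", "switch providers"),
    "spend": ("downgrade", "too expensive"),
}


def cx_risk_flags(text, score=None, low_score_threshold=3):
    """Return the list of risk labels triggered by one interaction.

    `text` is a transcript or survey comment; `score` is an optional
    survey rating (assumed here to be on a 0-10 scale).
    """
    flags = []
    lowered = text.lower()
    for risk, phrases in RISK_PHRASES.items():
        if any(phrase in lowered for phrase in phrases):
            flags.append(risk)
    if score is not None and score <= low_score_threshold:
        flags.append("low_score")
    return flags
```

For example, `cx_risk_flags("I want to cancel my policy", score=2)` would return both a churn flag and a low-score flag, so that interaction could be routed for follow-up. Simple phrase matching is used here only for readability; the talk describes AI-driven text and speech analytics doing this work.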

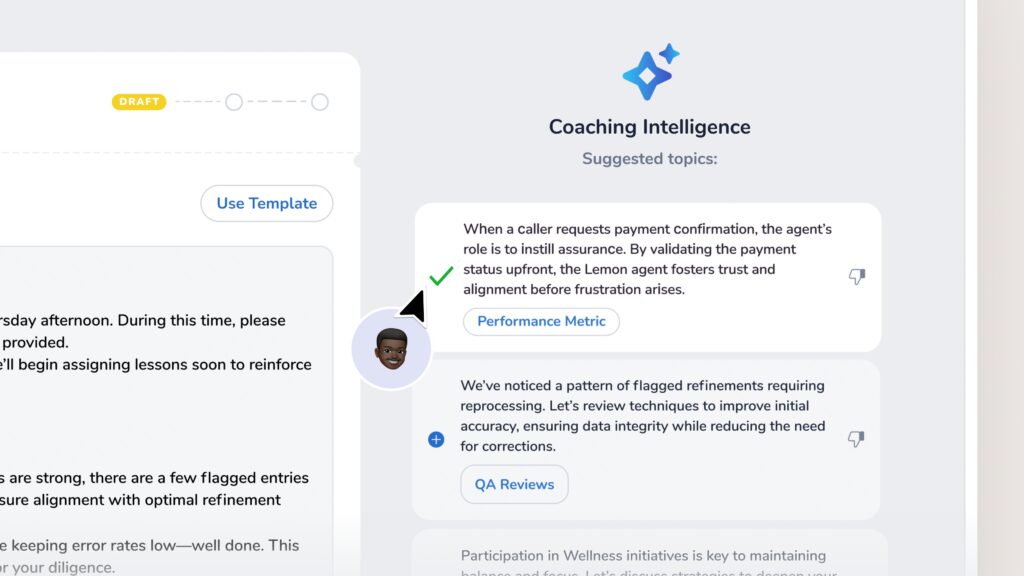

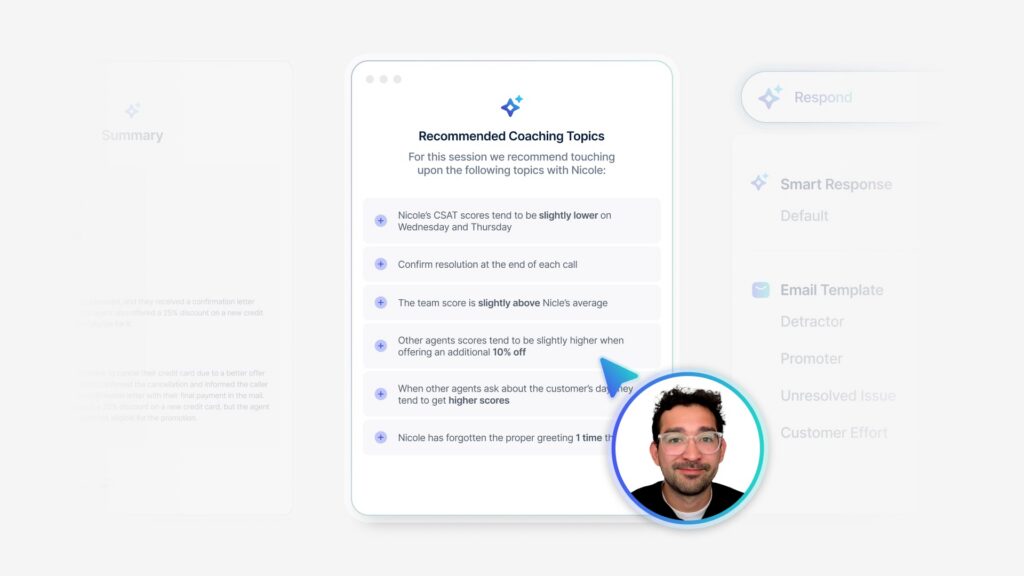

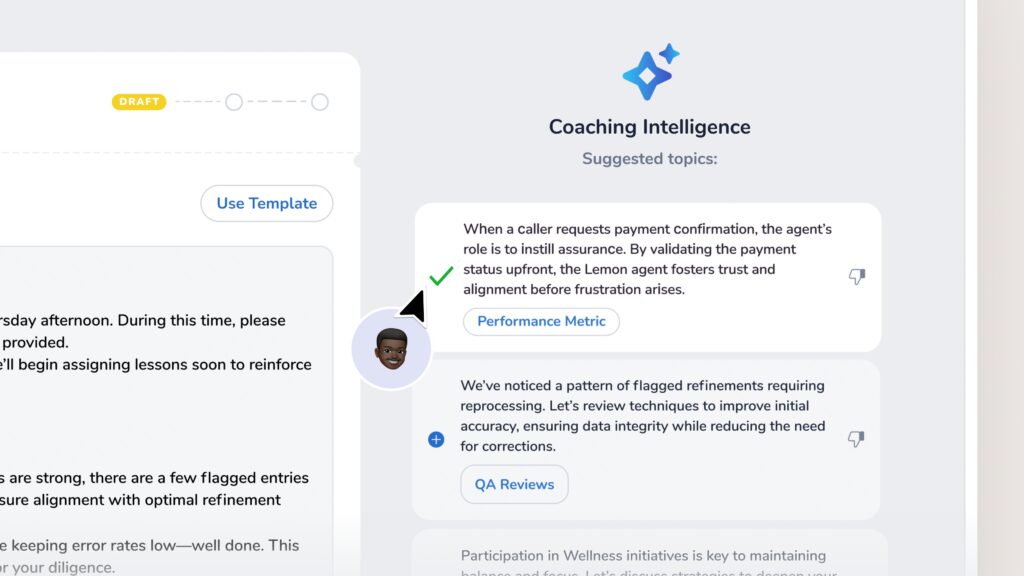

And we can see there’s of course also trending topics that are having either the positive or the negative impact. But again, we also wanna make sure we’re keeping an eye on how our agents are doing, and it might be at the broad organizational level, it might be at the team or even the individual level.

And again, a hundred percent of calls are automatically scored for quality. I dunno how many of you have worked at a contact center or heard the pain of your contact center people. But listening manually to calls is incredibly time consuming. And instead, you can have your supervisors and managers focusing on coaching and improving call quality because now they’re not having to do all that listening and they can focus on the action part of things.

So really huge opportunity. The second charts here are showing some very interesting, again, automated findings. Looking at what was [00:27:00] that experience like on the phone? Was there an agent greeting? Was there clarity and politeness? Was there like really weird periods of silence and did they talk over each other?

And so that gets rated as well. And of course there’s also a lot of emotion that you can be getting, not just from the customer but from the agent. So one example that I wanna share comes from the world of banking. And this was a regional bank that used speech analytics to reduce costs and improve the customer experience.

So this bank wanted to learn more about, what’s driving our customers, how do we deepen our relationships and accelerate issue resolution. So they got around 1.2 million calls a year. And again, they used speech analytics to do some automatic dispositioning or assigning of reason codes, and it allowed them to see what every call was about.

And using speech analytics and the sophisticated AI that we have, they uncovered insights that allowed them to make changes that resulted in millions of dollars in cost savings as well as a [00:28:00] 20% improvement in first call resolution. And when you try to move first call resolution, that can be a nasty metric.

So pretty impressive results. Now I have one final example. This is the big kahuna. This is the holy grail. We have up here the digital channel, the social channel, conversational customer, conversational agent. Oh my goodness. You can see what’s driving things. Top scores, bottom scores. You can have a field day.

And so one of the things that’s interesting here is you start to look at the scores. You can see well. What am I hearing? Maybe universally, and we see some of that frustration that’s coming through in digital, particularly around malfunctions. And search is hitting every channel. So that’s telling you, wow, this is something pretty serious.

And there’s obviously other things you can do. Now, again, not day one. This is further down the road and something you can aspire to. So I just wanted to show you what’s possible. But to get there, we have to go one bite at a time. So let’s just review a few of the things for you to [00:29:00] think about as you go back and start having conversations about this.

’Cause we want to be bringing these valuable data sources together and want you all to be successful. Number one is engage key stakeholders. We cannot do this work alone. And to engage key stakeholders, you have to speak their language. Sometimes the customer experience thing doesn’t really work for them.

It’s not customer experience for customer experience sake, it’s we need to improve how our agents are doing. We need to reduce call volume. We need to shorten average handle time. When you start talking about things that they’re measured on, you get their ear and what’s great is not only will you then be improving the contact center metrics, you’re bound to be fixing the customer experience as well.

To get to root cause, which is ultimately where we’re trying to go, we have to make sure we identify the right new signals. Just because our customers are talking to us everywhere, we’re not prepared to deal with everywhere just yet. You know what? We may wanna think about what we [00:30:00] hear in the contact center, but you probably are better served by digital insights. And again, we have to think about getting these in because AI is incredibly powerful and what fuels it is data. So the more data you bring in, the better your results will be. They’re going to be more accurate, and they’re going to better reflect what’s happening. So you need to determine which signals are we going to start with.

That’s step one. Then where are they? I touched on this briefly. We know they probably aren’t just easily accessible on the SharePoint. You probably have to reach out to the contact center if that’s the case and say, where does this live? And now you’ve got IT involved and maybe you’re getting a little bit overwhelmed, and Medallia’s here to help you.

We do this all the time, so bringing that data in, finding it, helping you figure out what’s needed. You bring some technical folks from your side, we’ll bring some technical folks from our side. It will not be Steve or I, and they will help you figure out how to bring all that data together and get it in ultimately.

We do [00:31:00] this because we wanna harness the power of Medallia. That’s where the beauty happens. As we identify these sources and bring them in, and we use our sophisticated AI, we start finding things that are completely unexpected. And the beauty of things like the automation, the AI, is you could never do this yourself.

It’s thousands or millions of data points. We just don’t have the brain capacity to manage that. And you can learn about really cool things like what was their intention? How much effort did it take? What was the emotion that they were feeling? So really humanizing it because ultimately what we wanna be able to do is to drive action with this data.

We’re not just collecting data for data’s sake, and by doing this, we’re meeting our business’ objectives and we’re improving the customer experience. Steve and I would like to thank you all. We’d like to open for questions momentarily, but first there’s more. If you [00:32:00] have not gotten enough today, a couple of things that may be helpful to you.

If you have more questions, there’s going to be a Text Analytics 201 happening tomorrow, if you wanna hear a little bit more about that. 101 is taking place right after this. If you’re like, not ready for 201 yet, start with 101. There’s also a session tomorrow with some of our clients. It’s Mayo Clinic and Xcel Energy talking about digital.

So if you wanna really hear from some businesses and maybe pick their brain about how they got there, that’s the one. And finally, that product hub, if you’re like, I am intrigued by digital, they’re the folks to go to, they’re the experts. They can walk you through demos and show you everything you need to know. So with that, we are open for questions.

Q+A (2): So one of my questions was, you were talking about how you can use the AI to look at agent notes as well as the interaction that is happening over the phone. How does the system cleanly mitigate the difference between agent [00:33:00] perception and what’s actually being heard on the phone?

Jen Rodstrom: Great question. I’ll tell you one thing that I’ve learned. Yeah. And then Steve. I’ll say, with text analytics, what I love is you can sort and I can say, do I want to hear the agent? Do I wanna hear the customer, or do I wanna hear both? And by hear, really read, ’cause the speech gets, but you literally get an entire transcript of that conversation.

So you can, if you’re like, I don’t really know what’s happening here, you can read through it and it’s also flagged. So let’s say it was a five minute call, that’s a fair amount of conversation. It highlights for you with our usual red and green, what’s positive, what’s negative.

And so oftentimes you’ll hear “I’m so frustrated” from the customer, and then the agent, perhaps in agent notes, will write, “The customer’s very frustrated.” And so I think there can be some of that friction of they’re not completely aligned, but that’s where it can be helpful to not just have agent notes because that may be their perception versus what actually happened during the call.

Does that at all help to [00:34:00] answer it?

Q+A (2): It does a little bit. I think the secondary part of that is what’s happening to be able to train the AI to do what it needs to do, as well as how are you surfacing those discrepancies between the two places so that you can then effectively coach the agent?

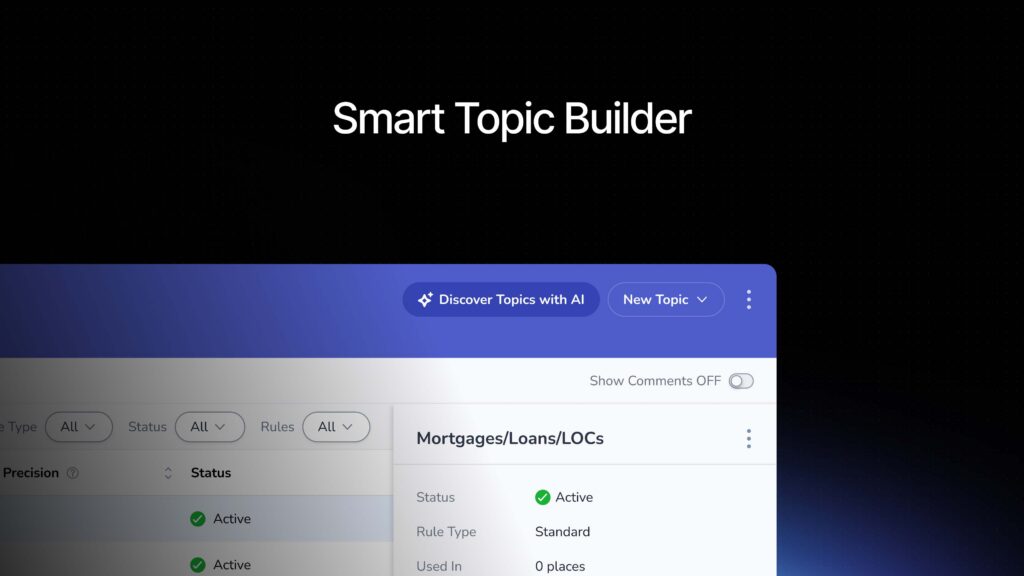

Steve Loyka: Sure. Are you using text analytics at all today for any of your unstructured data in your surveys? Yes? So it’s a similar process, right? It’s gonna parse the natural language that’s coming through the voice. We’re transcribing that, parsing it out, identifying keywords that are associated with topics that you’re already scanning for, right?

The example earlier about the banking, right? So let’s say you have certain things that an agent has to say that ensure that they’re in compliance, right, with certain rules and regulations. We can make sure that they’re adhering to those. We can also identify things that are associated with the key topics, the sort of the emerging topics and themes that are associated with your topics that you’re already scanning for in your other surveys, and make sure that we’re connecting [00:35:00] those pieces as well.

And yeah,

Jen Rodstrom: I’d say if you want some more, if you can make the 101 or 201, those might be able to even answer better than we can. So thank you. Thank you. Anyone else? Any questions? I know we hit the post-lunch coma time, you don’t have to, but if, oh, I see one here in the center. Oh.

We got the runner from this side. Got it.

Q+A (3): We’re doing great. Thank you. Thank you all for this presentation that was really insightful and loved getting the walkthrough on all of it. The outer loop component of this omnichannel makes a ton of sense. It’s obviously aggregating data across a ton of different channels and you’re able to see where pain points are coming through, which is again fantastic to see.

I’m curious, as you’re thinking through this omnichannel scope, what the inner loop interventions might look like and where that more like one-to-one interaction, how to improve this single customer’s experience rather than holistic, might show up.

Steve Loyka: Yeah, I think particularly in the digital channel, right?

There are opportunities to, as in the example with Joe trying to [00:36:00] complete something in a mobile or on a portal environment, being able to identify and flag where they’re struggling in the moment and interdict. Now it’s obviously gonna depend on what resources you have identified to support that inner loop process.

But there are opportunities to engage that individual so they don’t abandon the process that they’re already in. And meeting them in that place where the experience is being lived in that moment and assisting them in overcoming those obstacles. And so there are opportunities to alert to those struggles, whether it be the rage clicking we talked about, right?

Technical issues where we’re circling back on pages. And we’re able to be able to flag for those and identify those and be able to support the inner loop inside of the experience rather than after the fact, after they’ve abandoned.

Jen Rodstrom: And you can, of course, just like you can with your surveys, flag keywords.

So even if you don’t have a score. I know a lot of times we might say if the score is X or below, that triggers an alert. If we hear these words or terms, that might trigger an alert. So you can customize it that way. And again, [00:37:00] it’s great to set up all these alerts, but you gotta make sure you actually have humans, yeah, who are prepared. We’re not at the bot part yet where our bots can be doing this for us. So you actually have to have humans available to follow up as appropriate.
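
The alert logic described here (a score at or below some threshold, or certain words or terms showing up) can be sketched in a few lines. This is only an illustration under stated assumptions, not Medallia's actual configuration or API: the `Interaction` type, the `alert_reasons` function, the threshold of 6, and the term list are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    """One piece of feedback: a survey comment, call transcript, or chat."""
    text: str
    score: Optional[int] = None  # None when there is no survey score


# Hypothetical values, chosen for illustration only.
SCORE_THRESHOLD = 6
ALERT_TERMS = ("frustrated", "cancel", "not working")


def alert_reasons(item: Interaction) -> list[str]:
    """Return the reasons this interaction should be routed to a human."""
    reasons = []
    # Rule 1: a score exists and is at or below the threshold.
    if item.score is not None and item.score <= SCORE_THRESHOLD:
        reasons.append(f"score {item.score} <= {SCORE_THRESHOLD}")
    # Rule 2: any watched term appears in the text (case-insensitive).
    lowered = item.text.lower()
    for term in ALERT_TERMS:
        if term in lowered:
            reasons.append(f"mentions '{term}'")
    return reasons
```

An interaction with no score can still raise an alert through the keyword rule alone, which is the case being described. An empty list means nothing needs follow-up; anything else still has to land in front of a person who is prepared to act on it.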

Steve Loyka: The integration also of the digital behavior observation and the things that you might be using already in a digital environment, like digital intercepts, right?

So if you’re identifying someone struggling with a particular piece of a workflow, right? Being able to say, do you need help? Can someone reach out to you? Do you need someone to call you and support you in completing this process? We can do those things as well. So combining both the digital intercepts that you may be using already in your digital channels to understand whether someone was able to accomplish the tasks they set out to, how difficult it was.

We can integrate the two of those to be able to do something in the moment for that individual.

Q+A (3): Y’all are giving a lot of credit to the speed of my team and I really appreciate that.

Jen Rodstrom: All right. Thank you everybody. Steve and I’ll be loitering for a little bit if you have any questions. I know Text 101 is gonna be in here next, if you wanna learn some more about text [00:38:00] analytics, and thank you so much for joining us.