Jason Reilly: [00:00:00] Hey everybody and welcome. Thank you for joining us today. We’re very excited to have you guys here. We’re gonna be doing our Designing Impactful Surveys masterclass, so we’re very much looking forward to having you guys with us today. So my name’s Jason Reilly. I’m a senior manager with the Medallia Strategy and Analytics team.

I’ve been in the industry for 22 years. I also teach survey design, statistics, and R programming at Syracuse University. Joining me today is Tami.

Tami Salmi: Hi everyone. My name’s Tami Salmi. I lead Medallia’s research services team. I’ve been with the company for six years now. I am a self-proclaimed data-driven nerd, I love designing surveys, and I’m really excited to chat with you guys today.

Jason Reilly: To be fair, we’re gonna be covering a lot of content today. We’re taking you from Survey Design 101, all the way here at the top, all the way to Survey Design 201. So we’re gonna be trying to cram two semesters’ worth of content into one 45-minute session. I might go a little fast. As a note, if any of you guys [00:01:00] have a question while I’m talking, don’t hesitate to scream, don’t hesitate to throw something. Sometimes it’s the only way to get my attention.

As a note, there is going to be a surprise quiz at the end of this class. People in the front are getting bonus points, if that’s gonna change your decision on where to sit. Just as a quick FYI. No, I’m kidding about that.

Alright, so we’re covering a couple of different topics today. Some of what we’re gonna be talking about is really helping you to answer overall three questions, right? One. Are you identifying what matters? Do you have intent in your survey design? Are you looking at your survey as an opportunity to ask actual, meaningful and impactful questions?

Two. Are you doing the best that you can to capture signal and survey? We talked about this a little bit already at the keynote, right? How do you combine your survey signals with operational data, the structured and unstructured? How do you bring that together? And then three, [00:02:00] how do you take those signals and action them?

How do you turn what you’re gathering, what you’re checking, what you’re asking about, and turning that into a meaningful and actionable setup. First question for you. Who here knows why they build a survey? Raise your hand if you know why you’re building your survey. What action are you trying to influence?

What behavior? What ROI metric? Does anyone know how their survey, as it currently exists, influences your business? Is anyone able to answer that question right now? Okay, you’re in the right place. Alright, so when you’re building a survey, the reason we talk about intent first is this: what do you wanna do with the information?

You can make a survey for no purpose, right? You can put together a bunch of questions. Hey, what’s your favorite type of dog? What’s your favorite cat? Feel free, it’s not gonna get you anywhere. And we talk [00:03:00] about survey exhaustion. People are getting so many surveys, all the time, that they don’t know what to do with them all.

They don’t want to answer any more questions, they’re done. So when you build a survey, you have to ask yourself, what do you wanna do with this information? How does this tie to a business metric? How do I know if getting a five out of five on an OSAT survey is going to lead to repeat business, or that’s going to lead to future stays, or that’s going to lead to new orders, or that’s gonna lead to new revenue, right?

How does that tie together? These are the questions you need to start asking yourself now. Next poll for you guys: raise your hand if you’re using NPS or LTR. Okay. Nice. Okay, keep your hands up. How many now? Raise your hand if you’re using OSAT. Okay, perfect. And raise your hand if you’re using effort or [00:04:00] CES.

Okay. New show of hands, how many of you are using more than one? Okay, perfect. So what you’re looking at here with these questions is they all have different things that they’re trying to track, which means if you’re using more than one, your survey lacks intent. You don’t know which way your survey is going to drive your business.

Because when you ask these types of what we call dependent variable questions (NPS, LTR, OSAT, CES), when you ask these questions on the same survey, the majority of the time your customers are gonna answer them very closely together, especially if they’re back to back. You start with: how likely are you to recommend my company?

How likely are you to recommend Bob, who helped you with whatever problem? How satisfied were you with what Bob did? So if I’m sitting there and I’m saying, hey, I’m going to tell Bob [00:05:00] that I was very satisfied with his help, then my logical conclusion is that I’m probably gonna recommend Bob.

That’s my thought process. That’s how I’m gonna work through the survey flow. Even if that question comes later, that’s what I’m gonna do. One of the key takeaways I want you to remember from this masterclass is that if you are taking a dependent variable, take one. Understand what you’re asking about and why.

Do you care about effort? Do you care about satisfaction and building good experiences? Do you care if the person is actually going to promote your brand or talk about you to their friends and family? Those are different questions, and it’s completely okay to care about all three. Build three separate surveys.

If you want all three. Build three separate ones. If you want one, just build one. Okay, so I talked about survey flow a little bit. Why does it matter? So as I said, when you have [00:06:00] your overall metric or dependent variable, this is core to the CX question you’re trying to answer. So when you’re looking at this and you care about NPS, that’s gonna be your first question.

“How likely are you to recommend?”, and then we’ll convert to the NPS scale afterwards. You wanna have an overall metric open-ended question as well, just because that puts a little context around it. We talked about unstructured data already. This is an example of that unstructured data. In general, your key focus is to keep your survey short.
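The conversion from raw likelihood-to-recommend ratings to an NPS figure can be sketched as follows. This is a minimal illustration using the commonly published convention (9 to 10 are promoters, 0 to 6 are detractors, 7 to 8 are passives); the function name `nps` and the sample ratings are made up for the example and are not taken from the session.

```python
def nps(scores):
    """Net Promoter Score from 0-10 likelihood-to-recommend ratings.

    Uses the common convention: promoters score 9-10, detractors 0-6,
    and passives (7-8) count only toward the total.
    """
    scores = list(scores)
    if not scores:
        raise ValueError("need at least one rating")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Percentage of promoters minus percentage of detractors.
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical ratings, for illustration only.
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(nps(ratings))
```

Because the score is a difference of two percentages, it ranges from -100 (all detractors) to 100 (all promoters).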

You don’t necessarily want to have three dependent variables, with three subsets of KPIs, with three subsets of drivers. That’s too long. That’s what I like to call a kitchen sink survey. I’m asking about everything, every possible metric, every possible touch point, every possible distraction that could have occurred during my customer’s experience.

I want to ask about, because I want to have every question represented. That’s going to [00:07:00] hurt your response rate. It’s gonna hurt your complete rate. It’s gonna hurt people’s engagement, and it’s gonna, over the long term, reduce the number of people willing to take your survey. I’ve seen a lot of kitchen sink surveys in my time, and nine times out of 10, when I go through them and I try to analyze them, which I’ll talk about a little bit towards the end, I end up having to drop so much out of it.

So few people responded to this, I can’t give you an answer on this. They skipped it. It wasn’t something that they cared about. So, show versus tell. If I’m telling you to cut questions out, how do you track this information? A great example of this is AHT. How many people here have call center operations?

Okay, good. How many of you guys care about lowering AHT? Couple of you. Okay, good. Alright, so you can ask a survey question. You could set up a survey question that says, “How satisfied were you with the length [00:08:00] of time it took to resolve your issue today?” Okay. Classic survey question. Most of you guys who have call center operations should be seeing something like that or have probably seen something like that in your careers.

Nothing wrong with that. Perfect. How many of you guys would also then want to add another question? Maybe, “Hey, do you feel that your issue was resolved? Was your issue resolved today?” So one of the things that happens is that when you look at this through a linear regression model or some other kind of regression model and you control for issue resolution, then the length of time no longer matters, and you can see that people who have their issue resolved don’t care about the length of time it took to resolve the issue, and people who did not have their issue resolved absolutely care about the amount of time they spend on the phone.

And we were able to quantify that in an interesting case study, the notes of which will be in the PDF after the conference. What we were able to see is that we could have calls that were 15, [00:09:00] 16, 17 minutes long, which according to the client’s best-in-class expectation was double the length of time of what an agent should have taken, but the issue was resolved and the comments were like, “Wow, the agent was so quick, the agent was so fast. I’ve never had a better call experience in my life.” Issue resolved.

Issue not resolved: call times, four minutes, five minutes. “This was the longest call of my life.” Time, after all, is relative, right? So when you’re looking at your data, it’s important to say, okay, what can we quantify about the experience so we don’t have to ask about that? Can we look at AHT? Can we look at how an agent is approaching their tools and utilizing them?

Can we see what the customer was calling about? Can we tell from an operational perspective, is it fixed afterwards? So for example, like a cable company, they’re calling because their service is out. Can we see that service has been [00:10:00] restored after the call? That is an objective measure of issue resolution.

The time it took for the agent to get that delivered is an objective measure of the time it took to get the issue resolved, which allows us to then have conversations about goal setting. How much time do we actually wanna spend on the phone talking about, lack of tv, lack of internet, whatever for the cable case.
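The interaction between handle time and issue resolution described above can be illustrated with a small sketch. This is not the regression model from the case study; it is a simpler stand-in that fits a one-predictor least-squares slope of satisfaction on handle time separately for resolved and unresolved calls, which is one basic way to hold resolution constant. The rows are invented for illustration, and the names `slope` and `slope_by_resolution` are hypothetical.

```python
from collections import defaultdict


def slope(xs, ys):
    """Ordinary least-squares slope of y on x (single predictor)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x has no variation")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def slope_by_resolution(calls):
    """Slope of satisfaction on handle time, computed per resolution status."""
    groups = defaultdict(lambda: ([], []))
    for minutes, satisfaction, resolved in calls:
        xs, ys = groups[resolved]
        xs.append(minutes)
        ys.append(satisfaction)
    return {resolved: slope(xs, ys) for resolved, (xs, ys) in groups.items()}


# Made-up rows: (handle_minutes from operational data,
#                survey satisfaction 0-10, issue_resolved flag)
calls = [
    (4, 9, True), (8, 10, True), (12, 9, True), (16, 10, True),
    (4, 5, False), (8, 4, False), (12, 2, False), (16, 1, False),
]
slopes = slope_by_resolution(calls)
print(slopes)
```

In this toy data the slope for resolved calls is close to zero while the slope for unresolved calls is clearly negative, which is the pattern the speaker describes. Note that handle time and the resolution flag come from operational records here, so neither needs a survey question.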

All of those things matter. Don’t be afraid to break barriers down and silos down in your organizations. This is just a basic data set of things that I’ve personally worked with or seen. Some of this is Medallia; DXA, for example, should be something you guys recognize. Some of this isn’t, like MCIF files. But this is all stuff that I’ve worked with, and sometimes I didn’t have direct access to it.

I had to go talk to somebody. I had to say, Hey, I need to get some access to some data that you have, because I’m trying to make things better. Don’t be afraid to have those conversations. Silos are your worst enemy. If all you [00:11:00] have access to is your survey data, you guys just saw it at the keynote, you’re missing 90% of your signal.

There’s so much more out there. You guys have all of this. I can guarantee you this data or some version of it exists in your organizations. If you are looking to understand the signals and this customer experience, this is data that you should be looking for. This is data that you should absolutely be wanting to find.

Because when you pull this into your surveys, it’s gonna make them stronger and it’s gonna make them better. I’m gonna turn it over to Tami.

Tami Salmi: Perfect. So we’re gonna talk about some of the little details now. So the first one’s gonna be, should I put all of my questions on one page or should I break it up over multiple pages?

And this is all gonna be about user experience. Remember, we’re designing surveys for the people that take them. So we want you to think about this. Ask yourself, how important is it that I keep respondents really focused on a clean interface, right? I want them focused only on one or two [00:12:00] questions at a time.

Maybe, as you saw, we have some logical sections to our survey. We should break it up because of that. Maybe if we put it all on one page, it’s gonna feel really overwhelming to someone. The end goal here is to create the user experience that we desire, so people are engaged with the survey. And for us, we generally recommend multiple pages, but I will say do not take that to mean that we recommend long surveys.

We still want you to be focused on having short surveys that are really smart. Next, the flex question. What this means, and you can see an example on the screen, is simply asking someone, “Do you have time to answer a few more questions?” Now, the reason that we do this is because we see abandonment issues.

People are dropping off the survey in the middle. So how we can guide you is to say, Hey, what questions are really critical? What questions are nice to have? And then we plant the flex after [00:13:00] those critical questions. That allows the respondent, it empowers them to say, yeah, I’ll give you two more minutes.

I’ll give you more time. I can answer those nice-to-have questions. The really fun thing about this is it’s super easy to test. You can implement this. All you’re looking for is an increase in response rates in the critical questions, and you can decide whether this was a good add, or not a great add if we’re not getting the value out of those increased response rates.

Okay, required questions. Now, I think the interesting thing here is that we can create some frustration when we’re requiring questions. We don’t want to do that. Our general recommendation is to, of course, again, focus on the critical ones, require those, but ultimately, if we require everything, we can increase abandonment rates, which we don’t wanna do.

So those nice-to-have questions, keep ’em optional. Keep ’em optional. Now, along the same lines, people always ask, should [00:14:00] I have an N/A? Should I offer a “don’t know” option on some of my survey questions, my drivers? The alternative is just allowing people to skip a question, so we don’t necessarily have to have that added column.

Now we, again, are data lovers, so we are here to tell you that our main objective here is to have quality data. And in order to do that, we need respondents to have a way to tell us that a question doesn’t apply to them. The easiest way for us to do that, the most obvious way to do that, is to have an N/A or a “don’t know.” If we want to think about that alternative, that skip,

in this case we’re gonna be relying on some instruction text. They might be moving fast, too fast. They’re gonna read right over it. What we never want is to have someone answer a question that doesn’t apply, ’cause that’s gonna jeopardize our data quality. So again, the recommendation here is have that N/A, have that “don’t know.”

Alright, you’re all CX and EX [00:15:00] experts. You guys know that it’s important to follow up with customers, follow up with employees, close the loop, right? That’s how we learn, that’s how we improve. That’s how we build trust with our customers. The reality is we do recommend asking for permission to contact. The reason is that we want respondents to know that we respect their privacy.

We want to make sure that if we’re collecting phone numbers, if we’re collecting email addresses, be transparent. Tell ’em what we’re gonna do with that information. Ultimately, we wanna set the expectation that we’re not necessarily going to contact you, right? We are just saying, do we have that permission?

I cannot emphasize enough the importance of survey design. When we have a survey that is well designed, with a very nice, clean layout, we are going to get respondents really focused on questions. When they’re focused, they’re going to answer them more honestly. They’re going to answer them [00:16:00] with better intent, right?

They’re gonna provide that thoughtful feedback. Design should not be overlooked, and I think a lot of you in this room may already have a survey program. And if that’s the case and you haven’t touched it in two years, ’cause the survey’s working, the data’s working. That’s great. Please don’t overlook survey design.

This is what’s gonna get people engaged. And remember, this is an extension of your brand, right? This is when we call our friends in marketing and say, Hey, help us. Help us get that perfect email invitation. Help us. Design this survey, put some fancy graphics in it, right? We can do so much. The ability at Medallia to make a really nice sleek survey is there, so the reality is this is important because we can get people to focus on the questions and we’re getting better quality data out of this.

All right. Now we’ve talked about some of those big pieces of the overall survey design. We’re gonna focus now on question design.

Jason Reilly: Yes. [00:17:00] Thank you. So I’m going to jump in here really quickly. There’s a couple of things that are near and dear to my heart based on questions and feedback that I’ve been getting from clients like you.

So the first question that always comes up is scale design, right? Zero to 10, the 11-point. One to five, one to seven. In general, these are all the same. These are what are called pseudo-continuous Likert scales, basically. They’re great. They work, they’ve worked for many years. Are these necessarily going to be any better?

Going from a five point to an 11 point? Yes, you might see a little bit more improvement in your signal. That being said, if you have five point scales, that’s great. They work too. There’s lots of studies that have been done that show that they work, that they’re engaged and people understand them, which is critical and important.

People work with them. Really, at the end of the day, what matters is: is it consistent? A lot of social survey data, [00:18:00] for example, comes across as five-point scales. So if you’re going to be seeing social media as a critical component of your CX strategy, then yes, you absolutely want to probably keep five-point scales consistent throughout, because that will keep it consistent.

It’ll make it easier for you to analyze and compare between the different scale periods. That being said, this is really an art, not necessarily a science here. If you do need to have multiple different scales, it’s okay. The main thing is that when you are doing them, don’t just put the numbers out there.

At least try to explain a little bit about what the numbers mean to you. It’s important that the person taking the survey understands how you are interpreting it. What is a zero? What is a one? What is a five? What is a 10? This is especially true if you have what’s called a bipolar scale. You have a zero, you have a neutral, and then you have a 10.

And that’s like highly satisfied versus highly unsatisfied. So you have that range. So it’s important to make sure you’re providing some [00:19:00] text around here. Also, another critical thing: one aspect of anchors that we have seen is that they do improve response rate. When people have a better understanding of what you’re actually asking for and how to interpret it, they can better align it with their own experience and give you that experience information.

The final major note that I want to make sure is included here: no images, no emojis, no color. Okay? So generally, from a design perspective or from a marketing perspective, your colors are important. That’s all critical, and you guys like it and you’re engaged with it, and I understand. Culturally, however, when you’re including things like red or green, people are gonna interpret red equals bad.

Red equals stop. Red equals danger. Red equals warning. Green equals good. Green equals money. Who doesn’t like that? Green equals happy. Green equals go, right? When you’re driving, do you prefer to see green lights or red lights? Same thing. When you put colors on, or you [00:20:00] put emoji symbols like smiley faces and frowny faces, or you put beautiful images of your retail store environment in perfect condition, you are influencing how the survey is being responded to.

You are replacing the imprecise memory that the person has of their experience with a pseudo memory of a perfect experience. Also, avoid push language. Bob would love to hear about how much you loved his service to you when you were in the store, stuff like that. Lisa would love to get feedback on how she could be a better agent for you.

When you do that kind of thing, you might think you’re personifying it. But you’re not, you’re pushing people towards a specific type of answer. You’re pushing them to be like, oh, I actually, I don’t hate Lisa. I don’t wanna get her in trouble. So I’ll say something nice about her so that no one will get her in trouble, right?

Or you might not take the survey at all. I don’t feel comfortable with this. I don’t [00:21:00] want to do this. It’s okay to respond to a person. If a person gives you a 10, it’s okay for the follow-up question to say something like, “Hey, we’re happy that you’re happy. Can you tell us what happened?” That’s fine.

You can always respond to what your customers are telling you. Don’t tell them how to respond. That’s the critical differentiation here. Alright, sorry Tami for interrupting. I’m gonna hand it back to you now.

Tami Salmi: Oh, all good. We get to talk about the fun stuff now. These are features that are available today that you can put in your surveys that are gonna help drive that engagement.

And remember, engagement means we’re getting better quality data. We have people that are being thoughtful. So we have in-page conditioning. Jason just described this. What we’re doing is we’re changing the text of a survey question based on the prior response. So you can see the example. They’re a detractor.

They’re giving us that negative score, so we’re changing the follow-up comment box to tell us just something a [00:22:00] little differently. We’re personalizing that experience. I have a joke that I wanna just share. Hey, Jason, how are surveys like marriages? They ask a lot of questions and they never listen to the answers.

Jason Reilly: It’s very true.

Tami Salmi: The reality is when we are able to change the text box, rather than saying, please give us the reason for your score, right? That’s the most common one. It’s very different than saying, we’re thrilled. You’ve had a great experience. Tell us what you enjoyed most. That feels more personal.
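The in-page conditioning described above amounts to choosing the follow-up prompt from the prior score. The sketch below is a plain-Python illustration of that logic, not Medallia configuration. The promoter and default prompts echo the wording quoted in the session; the detractor wording, the score bands (borrowed from the usual NPS convention), and the name `follow_up_prompt` are assumptions.

```python
def follow_up_prompt(ltr_score):
    """Pick the comment-box prompt based on the 0-10 score just given."""
    if not 0 <= ltr_score <= 10:
        raise ValueError("score must be between 0 and 10")
    if ltr_score >= 9:
        # Wording quoted in the session for a happy respondent.
        return ("We're thrilled you've had a great experience. "
                "Tell us what you enjoyed most.")
    if ltr_score <= 6:
        # Hypothetical wording for a detractor; it responds to the score
        # without telling the respondent how to answer.
        return "We're sorry we fell short. Please tell us what went wrong."
    # Generic prompt, used here for the middle of the scale.
    return "Please give us the reason for your score."


print(follow_up_prompt(10))
print(follow_up_prompt(3))
```

The prompt reacts to what the respondent already said, which keeps it on the right side of the push-language warning from earlier in the session.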

That’s gonna invite people to give us more thoughtful responses. Okay, the next one, comment boost. Does anyone have this implemented? Yay. I see some hands. This is exactly what it’s described as, right? We’re trying to encourage longer comments. The reality is that’s gonna give us richer information. Now we’re gonna get more insights from the data.

Comment boost is awesome because [00:23:00] it’s that probe. You’re talking with a friend, right? And they’re saying, “Hey, tell me more.” That’s exactly what this is doing. It’s mimicking that experience by providing all of these little tiny tweaks that are, by the way, completely customizable. You can make those things say whatever you want, so this is really easy to implement.

It’s also available in multiple languages, and you can have this done today. Okay, so you’ve designed the survey. We’re talking about that time to go launch it. Pause, please pause. I just want to encourage everyone to consider testing your survey. What do I mean by test? Go talk to some customers.

Maybe you have some friendly ones that you can just run the survey by. Have them take it, give you their feedback, because guess what? What’s important to you might not be important to them. And I wanna show you an example of this. We worked with a company that manufactures uniforms, and when I say that, they also rent them out.

So you can wear the [00:24:00] uniform, you put it in a basket, they come pick it up, they launder it, they bring it back. Their product team thought really hard about this list. What attributes are we gonna ask about our uniforms? Looks pretty comprehensive, right? This is a nice list. We went out and talked to 10 customers.

That was it. And this is the list that they came up with. And you can see two items matched. Two, just the brands and just the comfort. Everything else that the customer cared about wasn’t going to be in our survey. We learned this by talking to the customers, so as you think about getting the buy-in from your stakeholders, please don’t leave out the people taking the survey.

They can give you really great feedback to make that survey better. It’s 2025, and we’re still talking about using incentives, but just a quick reminder here. This can drive some response rates. Significant increases, yes, but [00:25:00] there’s a drawback. People may be after the reward. So we have to remember that we’re focused on quality data.

We want people that are there to give us thoughtful responses, not try to collect a promo code. There might be a way for us to have some alternatives. Strike a balance, right? Maybe we’re gonna have a raffle. Maybe it’s a donation to charity, but the concept is that we want you to take away the best practices of survey design so that you don’t have to use an incentive.

’cause if we have a survey that is well designed, that is short, that is smart. We’re going to be able to get that experience to get those completion rates, and we don’t need to use an incentive. All right. Now, we can’t talk about surveys without talking about response rates. We are designing surveys so that people will take them.

Right? Now, at the end of the day, we assumed most people in this room already had a survey [00:26:00] program, so we wanted to just walk a little bit through how to think about response rates and what that means about your survey design. Now I can tell you, if you’re here and you’re not happy with your response rates, please remember that changing the survey isn’t necessarily the answer.

And I say that because response rates are the bottom of a funnel of a lot of health metrics. So when you think about survey health, we have to look at every piece of data available. What does that mean? We need to look at bounce rates. We’re sending all these email invitations out. How many are actually landing in the inbox?

Email open rates. That’s exactly what it’s described as, right? How many people clicked and opened that email? The next one: click-through rate. This is when we’re seeing they’re in the inbox. You made it that far. They opened the email. Did they click and start the survey? Did they give us that initial [00:27:00] rating?

And then we have response rates, right? And abandonment rates: how many people quit in the middle? And I wanna show you why changing the survey might not necessarily be the answer, with an example. So we have the Medallia Benchmark, ’cause it’s gonna guide us here. That’s gonna be that top row. And what you’re gonna see, I’ve got a couple of green boxes where I’m doing well, I’m performing better than the Medallia Benchmark. But my response rate is slightly under the Medallia Benchmark.

Where my problem is, is the click-through rate. So if this was your data and you’re sitting here and you’re gonna go change your survey design, guess what? It’s not gonna matter. That’s not gonna matter at all, because the problem is with the email invitation. The fix is the email. So please remember, if you have response rate problems, do not just go change your survey, because it could be something else.
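The funnel reasoning above can be sketched in a few lines: compute each health metric from raw counts, compare against a benchmark, and list the stages that fall short. The denominators chosen here are one plausible set of definitions and may differ from how the platform calculates them; the counts, the benchmark values, and the names `funnel_rates` and `shortfalls` are all hypothetical.

```python
# Metrics where a lower value is the better outcome.
LOWER_IS_BETTER = {"bounce_rate", "abandonment_rate"}


def funnel_rates(sent, delivered, opened, started, completed):
    """Survey health metrics from invitation counts (assumed definitions)."""
    return {
        "bounce_rate": (sent - delivered) / sent,
        "open_rate": opened / delivered,
        "click_through_rate": started / opened,
        "abandonment_rate": (started - completed) / started,
        "response_rate": completed / sent,
    }


def shortfalls(rates, benchmark):
    """Metrics performing worse than benchmark, largest gap first."""
    gaps = {}
    for name, value in rates.items():
        gap = value - benchmark[name]
        if name in LOWER_IS_BETTER:
            gap = -gap  # flip so that negative always means "worse"
        if gap < 0:
            gaps[name] = gap
    return sorted(gaps.items(), key=lambda item: item[1])


# Invented counts and benchmark values, for illustration only.
rates = funnel_rates(sent=10000, delivered=9800, opened=4900,
                     started=490, completed=441)
benchmark = {"bounce_rate": 0.03, "open_rate": 0.45,
             "click_through_rate": 0.20, "abandonment_rate": 0.12,
             "response_rate": 0.05}
for name, gap in shortfalls(rates, benchmark):
    print(f"{name}: {gap:+.1%} vs benchmark")
```

With these made-up numbers the response rate is only slightly under benchmark while the click-through rate is far under it, mirroring the example on the slide: the invitation, not the survey, is where to look first.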

Quick plugs here. If you don’t have benchmarks in your instance: yes, these are free. [00:28:00] Anybody that opts in and participates can get ’em. By the way, we can slice and dice them by industry. They’re available quarterly. This is something that everyone should have to get you benchmarks. And again, all that survey health data is in here.

Another shout out. This is the Health Watch Best Practice package. So if you do not know your survey health data, this is a pre-configured reporting dashboard, meaning it’s out of the box. We literally have it available. Your servicing team can plug it in. You’re gonna get all that survey health data we just saw, but even better, you’re gonna get file health data.

You’re also gonna be able to get really cool Medallia engagement data. How engaged are my users? Who’s using the platform? What pages of the platform are they going to? This is pretty, pretty awesome. And the last thing is, okay, I’m not saying that you never have to change the survey. We might have a response rate problem. When you think about abandonment, [00:29:00] there’s a really cool module available in that package that’s gonna show you abandonment rate by page.

This is super simple, right? We want the data to drive the decisions. All we have to do is figure out where that drop off is happening. Go to that page, identify the problematic questions, make updates. Now, if you’re still having issues with response rates and our abandonment data is not helping us, I want you to pay a lot of attention to what Jason’s about to talk about.
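Finding where the drop-off happens is a small calculation once you have the number of respondents who reached each page. The sketch below is a generic illustration, not the dashboard module mentioned above; the page counts and the names `abandonment_by_page` and `worst_page` are hypothetical.

```python
def abandonment_by_page(reached, completed):
    """Share of respondents who left on each page.

    reached[i] is how many respondents reached page i + 1;
    completed is how many submitted the survey after the last page.
    """
    following = list(reached[1:]) + [completed]
    rates = []
    for page, (here, after) in enumerate(zip(reached, following), start=1):
        rate = (here - after) / here if here else 0.0
        rates.append((page, rate))
    return rates


def worst_page(reached, completed):
    """Page number with the highest abandonment rate."""
    return max(abandonment_by_page(reached, completed),
               key=lambda page_rate: page_rate[1])[0]


# Invented counts for a four-page survey.
reached = [1000, 950, 700, 680]
completed = 670
for page, rate in abandonment_by_page(reached, completed):
    print(f"page {page}: {rate:.1%} abandoned")
print("look first at page", worst_page(reached, completed))
```

Here page 2 loses roughly a quarter of the people who reach it, so that is the page whose questions deserve a review.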

We love data, right? And there’s a lot we can learn from the data of our existing surveys. That’s gonna help us decide how should we make decisions to change our survey design. We’re gonna pause before we get into the fun stuff, but I just wanna see if anyone’s brave enough to ask the first question here today,

Q+A: Is there a risk to seeing too high of a response rate?

Should that tell you something?

Tami Salmi: You’ve got amazing customers.

Jason Reilly: Yeah, we’re [00:30:00] jealous.

Q+A: We’re rocking around like 21, 24%. And it made me a little bit nervous. Be like,

No, something funky going on. Okay. Thank you. You’re welcome.

Jason Reilly: Yeah. The only thing I would add to that is that you can always use Ace, or the anti-cheating engine, just to make sure that there are no signals coming across in the survey’s responses that would indicate some kind of cheating or other issue.

That would be the only thing. If you have Ace up and running, then I would say you should feel confident in those numbers.

Q+A (2): You said earlier that you should dump out driving questions that are correlated, which makes sense.

However

Q+A (3): Is it homogenous across B2B and B2C, or are there any nuances that you would recommend for a B2B customer using these tools? Do the same rules apply, or do you have any stats on the metrics, right? Bounce rate, delivery, open rate, et cetera?

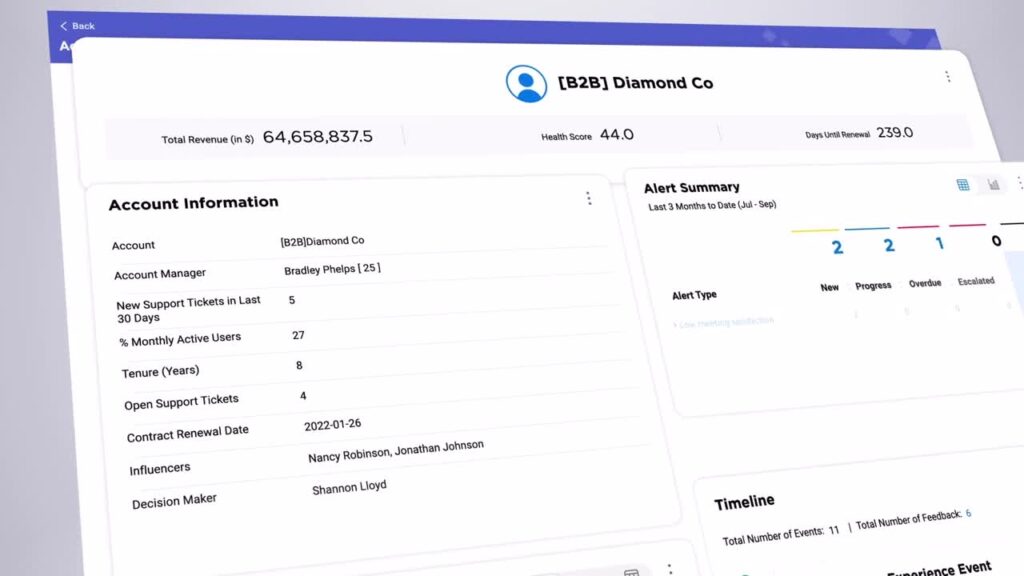

Tami Salmi: I think the same rules apply, but I will tell you that in general with B2B, we see [00:31:00] poor quality data, right?

We don’t have as great contact information. So there generally are lower response rates because we have high bounce rates. So we do have, I think, more difficulty getting people to respond just by the nature of B2B, from experience. But again, we can talk more. But I would say that the rest of it does apply though.

Thank you. We’re gonna keep moving, but it’s not because we don’t wanna answer all your questions. We’re still gonna leave time at the end, but I just want to just really hit on some really cool things you can do with your data.

Jason Reilly: Yeah. Alright. So you have a survey, it’s set up. You have everything that you need to have good questions.

You’re getting engagement, people are actually opening the emails, they’re responding to you. Great. What’s the first thing you do? You use Medallia Experience Cloud. There’s a lot of built-in dashboards and tools that exist in the platform that can help you understand what your responses look like. One critical lesson: after you build your survey, like Tami said, don’t just change everything because you’re not seeing something that you’re looking for.

[00:32:00] She’s right. After you build it, you don’t even want to do an analysis right away. You need to give it time. Rough rule of thumb, back of the napkin: 90 days. Let your survey run. You have to see if people are responding to it. You have to see what the trends look like before you can start analyzing it and seeing what’s going on.

While you’re doing that though, you can monitor things in Medallia Experience Cloud. You can look at stuff like trend lines, response rates, averages, so on and so forth. Now, if you are putting, like, trend lines in place, you’re putting any kind of pivot tables in place, things like that, make sure you’re adding the significance testing to it.

It’s a basic t-test. Set them for the 95% confidence level. If you don’t know how to do that, your servicing team can absolutely help you, but make sure you’re getting these things set up because these are gonna give you useful signal. Are changes happening? Great. Are those changes significant? Even better to know.
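As a rough illustration of the significance test described above, here is a minimal sketch in Python. It is not how Medallia Experience Cloud implements it. The function name `welch_t_test` and the sample scores are hypothetical, and the p-value uses a normal approximation to the t distribution, which is an assumption that only holds up for reasonably large samples.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t_test(sample_a, sample_b, confidence=0.95):
    """Compare two sets of scores with Welch's t statistic.

    The p-value uses a normal approximation to the t distribution, which is
    an assumption that is only reasonable for large samples (roughly 30+
    responses per group).
    """
    n_a, n_b = len(sample_a), len(sample_b)
    # Standard error of the difference in means (unequal variances allowed).
    std_err = sqrt(variance(sample_a) / n_a + variance(sample_b) / n_b)
    t_stat = (mean(sample_a) - mean(sample_b)) / std_err
    # Two-sided p-value from the standard normal curve.
    p_value = 2 * (1 - NormalDist().cdf(abs(t_stat)))
    return t_stat, p_value, p_value < (1 - confidence)


# Hypothetical 1-5 satisfaction scores for two periods (40 responses each).
last_quarter = [3, 4, 3, 2, 4, 3, 3, 4, 2, 3] * 4
this_quarter = [4, 4, 5, 3, 4, 5, 4, 4, 3, 5] * 4

t_stat, p_value, significant = welch_t_test(this_quarter, last_quarter)
print(f"t = {t_stat:.2f}, p = {p_value:.4f}, significant at 95%: {significant}")
```

With small samples, a proper t distribution (for example from a statistics package) would be the safer choice than the approximation used here.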

Another thing too. Make sure you’re looking at how response rate comes in. This is an excellent little chart. One of the things that it can show you is what we were just talking [00:33:00] about over there. When we see this kind of red line, all very close together, what this is telling me is that these questions are all probably being answered in a straight line.

So somebody answers a three on one, they answer a three on the next, and they answer a three on the next, over and over again. So when you see that these lines are super tight, you’re not seeing a lot of variance, that’s another warning sign for you. Thinking about these things and what these signals are actually telling you is critical to helping you think like someone like me is gonna think when I take your data, pull it down, and start looking at it, right?
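One way to check for that straight-line pattern once the data is pulled down is to look at how much each respondent's answers vary. This is only a sketch under assumptions: `straight_line_share` and the sample answers are hypothetical, and a spread of zero is just one possible cutoff.

```python
from statistics import pstdev


def straight_line_share(responses, min_spread=0.0):
    """Return the share of respondents whose answers barely vary.

    `responses` is a list of per-respondent answer lists. A respondent is
    flagged when the standard deviation across their answers is at or below
    `min_spread` (0.0 means every answer was identical).
    """
    flagged = [r for r in responses if pstdev(r) <= min_spread]
    return len(flagged) / len(responses)


# Hypothetical answers to five driver questions on a 1-5 scale.
responses = [
    [3, 3, 3, 3, 3],  # straight-lined
    [5, 5, 5, 5, 5],  # straight-lined
    [4, 2, 5, 3, 4],
    [1, 4, 4, 2, 5],
]

share = straight_line_share(responses)
print(f"{share:.0%} of respondents gave the same answer to every question")
```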

Another thing to look for: distributions. You can build these in Medallia Experience Cloud. This was just something that I was building for a class, so I grabbed it and put it in here. But one of the things I want you to know is that nothing in here is wrong. Another thing I get a lot of is everybody’s only answering a five.

Everybody’s only answering a four and five or whatever, like right-leaning or extreme leans. These aren’t necessarily wrong. Remember, we’re CX oriented. We’re [00:34:00] not trying to ask these questions for a senior vice president or a C-Suite executive. That’s not why we’re asking these questions. We’re asking these questions to understand what the customers think.

So we don’t look at this and say, this is a bad question. We look at this and we say, our customers are telling us conclusively that they’re very satisfied with this. We can now take this question off our survey. We can focus on something else. We can use this as an opportunity to experiment with other aspects of the customer experience.
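For anyone working with exported data rather than the built-in charts, a distribution like the right-leaning one just described can be tallied in a few lines. The scores below are hypothetical, and the top-two-box share is an added summary that the session does not name; treat this as a sketch, not a prescribed method.

```python
from collections import Counter


def score_distribution(scores, scale=(1, 2, 3, 4, 5)):
    """Return the share of responses at each point on the scale."""
    counts = Counter(scores)
    return {point: counts[point] / len(scores) for point in scale}


# Hypothetical right-leaning responses on a 1-5 scale.
scores = [5] * 60 + [4] * 25 + [3] * 10 + [2] * 3 + [1] * 2

distribution = score_distribution(scores)
top_two_box = distribution[4] + distribution[5]

# Print a simple text histogram, one row per scale point.
for point, share in distribution.items():
    print(f"{point}: {'#' * round(share * 50)} {share:.0%}")
print(f"Top-two-box share: {top_two_box:.0%}")
```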

If we see a distribution like this, we don’t say, this is a bad question. It can’t be included in the survey. What I see from this is really, this is a bimodal question. Yes or no, I’m satisfied. I’m not satisfied. It’s too far of a lean. Now, normal distributions are great. Normal distributions are also very rare.

Do not be concerned if you don’t have one, though. Alright. Another thing too, is that you can look at scores over time. [00:35:00] This is also a great example of why we care about signal. So you can see here’s all these questions. They’re all highly correlated. The lines all go in the same general direction. When we look at this, we would say, okay, we have an issue with the questions that we’re asking.

We’re not seeing a lot of differentiation here. We’re not seeing a lot of stuff that matters. More importantly, however, OAT is at the bottom. Why is OAT so low? If everything else is so much higher, we dig into this. And we find out that the reason that OAT is lower is because we forgot to ask an important question.

We missed a signal. That’s why it was lower. There was a known unknown, and this allowed us to find it. This is where you start to get into where I tend to be happiest, where I’m pulling data down and I’m starting to look at correlations. So one of the things that we are trying to understand here is how are the variables interacting with each other?

It came up earlier in a comment: when we have highly correlated questions, because they’re all driver questions and they’re all super correlated with each other, [00:36:00] then it isn’t something that we can put into a regression model of some kind, whether it’s a GLM or a standard linear regression. We can’t really use it ’cause it breaks the underlying assumptions that the model needs.

Variable five and variable seven, for example, are correlated at 0.9. They’re basically being answered identically, in the same pattern: as one goes up, the other absolutely will go up. As one goes down, the other absolutely will go down. We’re not getting useful signal there, so we need to look at this, or someone like me on your staff or here at Medallia will look at this and say, look, these are questions that we can eliminate.
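The check described here, finding driver questions that are answered almost identically, can be sketched as a pairwise correlation scan. The function names, the 0.9 cutoff, and the answer data below are illustrative assumptions, not the exact analysis from the session.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def highly_correlated_pairs(questions, threshold=0.9):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    pairs = []
    for (name_a, a), (name_b, b) in combinations(questions.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, round(r, 2)))
    return pairs


# Hypothetical 1-5 answers from eight respondents to three driver questions.
questions = {
    "variable_5": [1, 2, 3, 4, 5, 4, 2, 5],
    "variable_7": [1, 2, 3, 5, 5, 4, 2, 4],
    "variable_9": [3, 5, 1, 2, 4, 2, 4, 3],
}

for name_a, name_b, r in highly_correlated_pairs(questions):
    print(f"{name_a} and {name_b} move together (r = {r})")
```

Any pair this flags is a candidate for dropping one of the two questions before fitting a regression; which one to keep is a judgment call about which relationship makes more sense.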

In this case, we ended up getting rid of variable seven and we replaced it with variable five because variable five was the leading indicator. The relationship made more sense. Our final goal, someone like me at your organization, a data scientist or senior analyst, the final goal that we want to be able to get you to is to be able to say, here’s your performance and here’s what matters to the customers.

Here’s what you need to focus on. We [00:37:00] wanna be able to tell you that your important opportunity, the estimated coefficient, means that it’s important to your customers. The mean performance means that you’re not doing as well at it as you are other metrics. We want you to focus on that. We want that to be something that you action on.
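A minimal sketch of that importance-versus-performance view, assuming the estimated coefficients and mean scores have already been computed elsewhere. The driver names, the numbers, and the use of medians as cut points are all hypothetical choices for illustration.

```python
from statistics import median


def classify_drivers(drivers):
    """Label each driver by importance (coefficient) and performance (mean).

    `drivers` maps a question name to (estimated_coefficient, mean_score).
    Using the median of each as the cut point is an assumption made here
    for illustration.
    """
    coef_cut = median(coef for coef, _ in drivers.values())
    perf_cut = median(score for _, score in drivers.values())
    labels = {}
    for name, (coef, score) in drivers.items():
        importance = "important" if coef > coef_cut else "less important"
        performance = "strength" if score > perf_cut else "opportunity"
        labels[name] = f"{importance} {performance}"
    return labels


# Hypothetical regression coefficients and mean scores (1-5 scale).
drivers = {
    "pricing": (0.45, 3.1),
    "ease_of_use": (0.38, 4.4),
    "wait_time": (0.10, 3.4),
    "store_cleanliness": (0.05, 4.6),
}

for name, label in classify_drivers(drivers).items():
    print(f"{name}: {label}")
```

A driver with a high coefficient and a low mean lands in the "important opportunity" group, which is the one to action on first.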

That way you can take your survey and you can drive it to where it needs to be. Now, here’s the cool thing. Every question you design, every question you put into your survey, I’m gonna tell you right now, you’re not wrong on any of them. Even these questions down here that are less important strengths, you’re not wrong about them.

When you include a survey question, I tell you to include it with intent. What is something that you need to understand in relationship to a critical metric, whether it’s OAT, NPS, whatever? When you see something like that, you might say, oh, I thought it was critical, but it’s this less important strength. I obviously screwed up. No, that’s not the language you use.

You say you have successfully proven the null hypothesis. You have [00:38:00] successfully shown that this metric isn’t important. Look at you. Saving time, energy, and effort for the organization. You are an efficiency driver. You are proud of that. You are. That’s the best part about it. Like, seriously, it’s why I love this job.

I’m never wrong. I’m either accepting my hypothesis or I’m accepting my null hypothesis. I love it. My wife, not so much. Okay, finally, text analytics, unstructured data. Hopefully more than just text. Hopefully you’re pulling in a lot of other signal, but when you start to find out which questions you’re asking are showing the strongest relationship to the core metrics that you care about, then text analytics allows you to deep dive. Text analytics allows you to find the questions that you don’t even know you need to be asking.

So that when you realize, yep, I figured out what’s going on with this question. Performance is good. It’s no longer related to my key driver ’cause we’re doing so well at it. Not a problem. I’m gonna pull that out. I’m gonna get rid of it. I’m gonna replace it with pricing because pricing, we’re not doing so well at that.

This has a negative [00:39:00] impact when we look at TA, and I don’t have a question about that in my survey. I need to understand what about it. I need to start quantifying it. And so I’m gonna create a question. I’m gonna put it in there. Alright, so if you want to learn more, please feel free to book a Meet the Expert session with me or Tami.

Also, as a note, just wanna call this out. At this stage we have little cue cards right here on the tables. We are looking for surveys to assess the class. Did you find it useful? Interesting. Are you gonna action on it? Please make sure you’re taking that. We want to invite that out and I will turn it over to you.

Tami Salmi: Yeah, we have 17 seconds left, so that means,

Jason Reilly: Sorry, guys. I wanted to leave more time.

Tami Salmi: We didn’t leave enough time for questions at the end, so again, please just feel free to book the Meet the Expert Sessions. We’re happy to continue these conversations. And as you’re heading out just remember that we are going back to the main stage, so we are headed back up to see Will.

So as you leave, take a screenshot or take a picture of that QR code and then we’ll see you upstairs.

Jason Reilly: Thank you [00:40:00] everybody.