Mikal Reagan: [00:00:00] Good afternoon everyone. Welcome to the masterclass Text Analytics 101. I am one of your presenters today. My name is Mikal Reagan. I’m a member of our CX advisory team. I’ve been with Medallia about five years, but before joining Medallia, I was a practitioner like many of you guys, and we leveraged text analytics quite a bit.

I worked for a company called Volvo Trucks. With me today, presenting, is Lulu.

Lulu Tai: Hi everyone. I’m so excited to be here to talk to you about one of my favorite topics, text analytics. I’m Lulu Tai. I am a TA SME here at Medallia, and for the past four years I’ve been working closely with our clients to build strong TA programs.

So let’s dive in. Over to you, Mikal.

Mikal Reagan: And as you guys can tell, there’s clearly an average height requirement. That’s why Lulu and I got partnered together. Alright, so before we jump in, quick show of hands: who’s running CX programs today? I imagine the majority of us here. Who’s running an employee experience program? [00:01:00] Not as many, but a few.

I think the key takeaway is that everything you’re gonna learn today can obviously be applied to your CX programs, but also to your employee experience programs. So what we hope to cover today, and what you walk out of here with, is really understanding the foundational elements of how Medallia’s text analytics works and how it can be applied across a variety of different signals: not just surveys but, as you heard from the keynote, contact center transcripts, call logs, ratings, and reviews.

Any unstructured data can be fed into the Medallia platform, and we can analyze it and help to prioritize what are some of the areas that are impacting that customer or employee experience. So you’ll learn a little bit. We’ll take a brief demonstration to walk you through at a high level. Remember, this is a 101 class.

We do have a 201 class tomorrow, so if you learn more and you’re interested, please attend that class. And ultimately you want to be able to tell stories. The [00:02:00] data tells you some things, but you have to build those stories out and provide some context around that customer or employee experience leveraging text analytics.

And of course, we’ll end with a Q&A session, so please hold all questions until then. All right. Before we get started, at a very base level, what is text analytics? We’ve talked a lot about structured feedback. Many of us have these metrics, these questions with predefined answers in our surveys today.

Text analytics does not apply to that structured feedback; text analytics is run on the unstructured feedback. Again, all those additional signals that your customers or employees are leaving you, you wanna bring that in and analyze it: social media posts and, as you heard me say, call transcripts, case notes, chat logs, things of that nature.

We even have some of our clients utilizing text analytics on their case management notes, so when they’re closing the loop or their front lines are closing the loop, they can analyze and start to understand what are some of the key topics and [00:03:00] themes there that help fuel that outer loop innovation. Of course, there are some popular misconceptions, like: when I install TA, I’ll never have to read the comments again.

You need that contextual nature to humanize and understand exactly what those customers or employees are talking about. TA helps you sort through the noise. Thousands upon thousands of records are coming in. You can’t spend the time to review all that, so this gives you some of that directional guidance to get down to what those key areas of focus should be.

Another misconception is that you can set it and forget it. You don’t turn it on and not nurture it throughout your program. You want to nurture it, and Lulu’s gonna talk a little bit about how you continue to evolve and optimize your text analytics, because there are changes. You have new products going to market, new processes in place, so on and so forth.

So you have to continue to nurture the text analytics programs that you have. And we hear this a lot too: “It is not a [00:04:00] hundred percent accurate. My TA is not accurate.” A hundred percent, it can’t be. You’d be compromising what we call coverage, right? The ability for us to tag those comments, in a variety of different ways, that are coming in from those streams.

If it was a hundred percent accurate, you’d be losing out on that coverage. Lulu’s gonna talk a little bit more, but a baseline of around 80% is probably what you want to think about for coverage and accuracy. So now that we’ve got that behind us, let’s talk about how Medallia’s native text analytics works.

First step is verbatim parsing. We parse it down to the phrase level because there could be multiple topics within a single comment. It is not just “I had a great time at the Wynn,” but also “the front staff was not very helpful.” Those are two separate topics, so we wanna parse that down and really get down to that granular level.

From there, we tag those comments and we categorize them based on your topic hierarchy or taxonomy that you have [00:05:00] within your platform, and we apply sentiment to it as well. How does this customer feel about this particular topic? Were they strongly positive? Were they neutral? Did they have a really bad experience? That comes out in that sentiment analysis.
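The parse, tag, and sentiment steps described above can be pictured with a very rough sketch. This is not how Medallia's engine works internally; the splitting rule, the `TOPICS` keyword lists, and the tiny sentiment lexicon are all hypothetical, chosen only to show why a comment is broken into phrases before tagging.

```python
import re

# Hypothetical topic keyword lists and sentiment words (illustration only).
TOPICS = {"Stay": ["time", "stay"], "Staff": ["staff", "front desk"]}
POSITIVE = {"great", "helpful", "quick"}
NEGATIVE = {"not", "rude", "slow"}


def split_phrases(comment):
    """Split a comment on sentence punctuation and the conjunction 'but'."""
    parts = re.split(r"[.!?;]|\bbut\b", comment, flags=re.IGNORECASE)
    return [p.strip(" ,") for p in parts if p.strip(" ,")]


def tag_phrase(phrase):
    """Attach topics and a crude sentiment label to one phrase."""
    text = phrase.lower()
    words = set(re.findall(r"[a-z']+", text))
    topics = [name for name, kws in TOPICS.items() if any(kw in text for kw in kws)]
    if words & NEGATIVE:  # crude: any negative word wins, so "not helpful" is negative
        sentiment = "negative"
    elif words & POSITIVE:
        sentiment = "positive"
    else:
        sentiment = "neutral"
    return {"phrase": phrase, "topics": topics, "sentiment": sentiment}


comment = "I had a great time at the hotel, but the front staff was not very helpful."
tagged = [tag_phrase(p) for p in split_phrases(comment)]
for row in tagged:
    print(row)
```

Tagging the whole comment at once would mix the positive stay and the negative staff experience; splitting first keeps each topic paired with its own sentiment.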

Many years ago, when we were getting into text analytics, we focused on natural language processing, which I’m sure many of you guys know, but we’ve evolved to natural language understanding. The benefit of that is now you can start to really incorporate the emotion and the level of effort that your customers may or may not be conveying directly.

We can analyze that and start to determine things like: they are frustrated, they are angry, they are a potential churn risk, leveraging text analytics and our natural language understanding. And then finally, insights are great, but what do we need to do with them? We have to act on them. And there’s a variety of different ways that we can route this information to those key stakeholders that are gonna take action on that feedback, on [00:06:00] those insights.

You can also create trigger alerts based on keywords. For a lot of our regulated clients, such as finance, or even when you have to maintain compliance in the auto industry, we have specific topics that allow that to be routed to specific team members so that they can maintain that regulatory compliance.

You heard me talk about topics and themes, so just a quick breakdown. There won’t be a quiz on this, but if there is, you’ll pass it pretty easily. Topics are human generated. They are lists. We create those. We have many topic starter sets that are in your instances today. Themes, however, the way I refer to it, are machine generated.

It’s that unsupervised machine learning. It’s the blind spots. It’s what we don’t know, it’s what’s not getting tagged to the topics. So themes help us to identify what are some of those trends that might be coming out. As we evolve our TA program, we might see some of those themes and start to build out some topics so that we can tag [00:07:00] those accordingly.

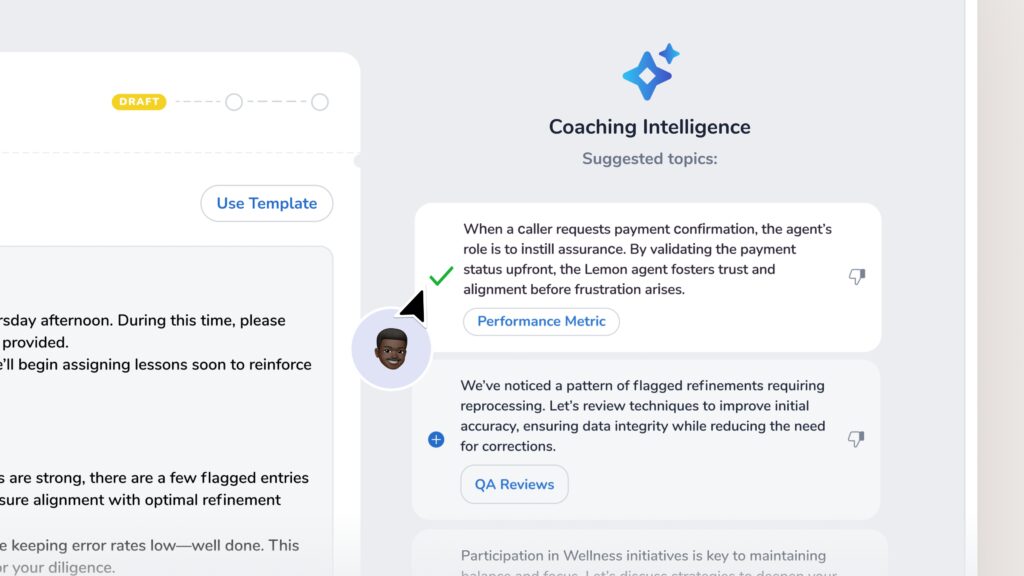

Another feature that we have, which you might have heard of, is action intelligence, and this is really around that natural language understanding. We can start to flag records that require some attention for that customer, whether they are at risk of churn or they just need a little bit more white glove service or handholding through their experience.

Customer effort: I don’t know who’s got a customer effort score today that they measure. Julie does, several other people, right? Which is great, ’cause we deliberately ask that question: how easy is it to do business? How easy was this transaction? But through the verbatims, we can start to suss out and assess the level of effort that’s being required of your customers.

More frustration, more emotion, a high level of effort, so on and so forth: it’s probably not a good avenue for retention. We can tap into suggested actions and recognition as well. Suggested actions: why not hear from your customers on the improvement activities, processes, so on and so forth that you could implement?

You can tag that with [00:08:00] suggested actions. And then recognition: we need to reinforce positive behavior. Call out those individuals that have delivered a fantastic service. I had an issue with my laptop just a few minutes ago, and the IT guy was quick and resolved it. I called him out by name. His leader’s gonna see that and be able to reinforce that positive behavior as well.

A couple of key notes. Impact score: raise your hand if you fully understand what impact score means. Good, we’ve got some. Good, because it’s always hard. I used to be in sales, and trying to sell people on the idea of what impact score was could get convoluted. It’s very simple. It’s the top-level scoring metric that text analytics looks at.

And it’s typically attached to NPS. It could be attached to overall satisfaction, but ultimately what impact score is saying is that when people are talking about a specific topic, it’s either gonna bring the score up or it’s gonna bring the score down. If we were to remove everybody [00:09:00] talking about that particular topic, “I have noisy brakes,” for example, then we’ll start to see that score go up.

So if it’s a negative impact score, it’s bringing down your overall metric. If it’s a positive impact score, it’s what’s boosting that metric up. We wanna reinforce that, that behavior. Oftentimes though, with some of the signals that we bring in, we don’t have a score attached to it. We don’t have an NPS, we don’t have a CSAT or CES.
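To make the "remove everybody talking about that topic" idea concrete, here is a minimal sketch. It assumes impact is the overall NPS minus the NPS recomputed without the respondents who mentioned the topic; the talk does not spell out Medallia's exact formula, so treat `impact_score` and the sample data as illustrative assumptions.

```python
def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        return 0.0
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def impact_score(responses, topic):
    """Assumed definition: overall NPS minus NPS without the topic's respondents.

    Negative means the topic is dragging the metric down.
    """
    overall = nps([score for score, _ in responses])
    without = nps([score for score, topics in responses if topic not in topics])
    return overall - without


# Hypothetical responses: (0-10 likelihood-to-recommend score, topics tagged).
responses = [
    (10, {"Staff"}),
    (9, set()),
    (3, {"Website"}),
    (2, {"Website"}),
    (8, set()),
    (10, set()),
]

print(round(impact_score(responses, "Website"), 1))  # negative: drags NPS down
print(round(impact_score(responses, "Staff"), 1))    # positive: lifts NPS
```

With this sample, removing the two website mentions would raise NPS sharply, so the website impact is negative, while the staff mention contributes positively.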

What we have is just open verbatim. We can apply a net sentiment score to that so that we can measure the overall sentiment related to that entire comment. In the example that you see here, there’s a variety of different statements in there. There’s two positive, one neutral, and two negative. So the net sentiment score, similar to how we calculate net promoter score, you take the percentage of positive, subtract the percentage of negative, and you arrive at a net sentiment score of zero.
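The arithmetic in that example can be written out as a short sketch. The function name and label strings are hypothetical; the calculation follows the description above, percentage of positive statements minus percentage of negative statements.

```python
def net_sentiment(labels):
    """Percent positive minus percent negative across tagged statements."""
    if not labels:
        return 0.0
    positive = labels.count("positive")
    negative = labels.count("negative")
    return 100 * (positive - negative) / len(labels)


# The comment from the example: two positive, one neutral, two negative.
statements = ["positive", "positive", "neutral", "negative", "negative"]
print(net_sentiment(statements))  # 40% positive - 40% negative = 0.0
```

Neutral statements still count in the denominator, which is why they pull the score toward zero without changing its sign.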

So there’s a lot of power in understanding these two metrics, especially as you work with your [00:10:00] teams to build out whatever those continuous improvement activities are. But more importantly, to prioritize what is having the biggest impact on the experiences that your brands are providing. Alright. Now we’re gonna jump into a brief demo in this demo environment.

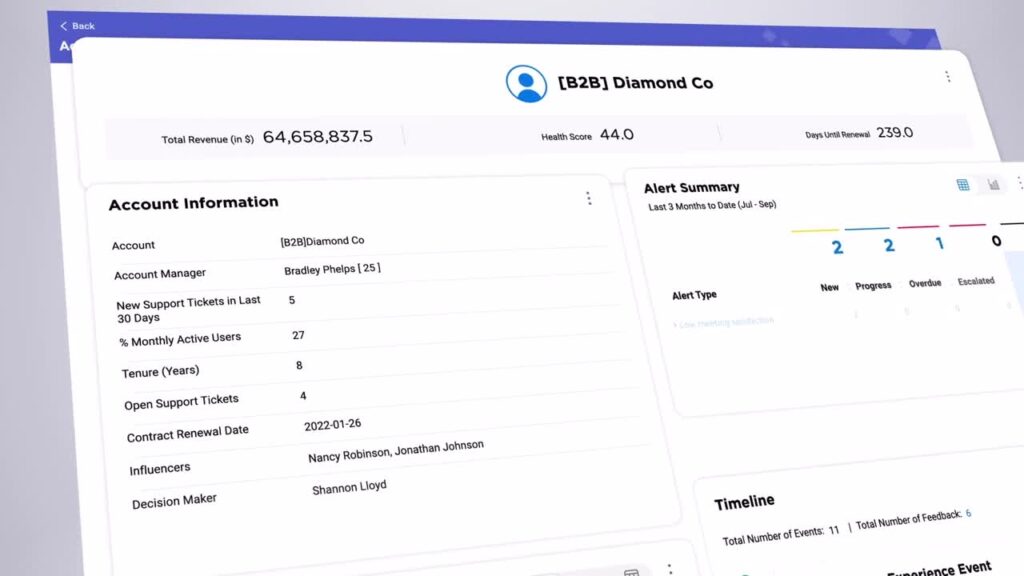

My name is Jack. I am an insights member for a large bank. And I’ve been hearing from our contact center that the volume of calls are going up significantly. Our metrics, KPIs in the contact center are going down, and I’ve been asked to launch an investigation. So I can go in here and I can start to sort through, and many of you guys are probably familiar with these types of modules.

I can see what are some of the trending topics that are coming in, in terms of their volume increase or decrease, as well as the related sentiment. Mobile app credit card in this example has a significant volume change from the last period, and sentiment trend is going down. A lot of people are frustrated about that.

That allows me then to [00:11:00] coach my agents, to prepare them for some of these calls that could be coming into their contact center, help them with their talk track, and hopefully start to drive more first contact resolution, reduce average hold time, handle time, things of that nature. Now I’m a little bit better prepared for some of the key trending topics that are coming in.

The other aspect that I’m looking at is I’m seeing under our emotions that frustration accounts for nearly 7% of all the volume that we have coming in. Frustration doesn’t tell you a lot, but if I click on that, what I can see now is this little side panel that pops up, and I can start to review some of the details behind that particular topic.

And what I can see is the main reason people are calling in is because they’re having a lot of frustration around making payments. It can’t be easy for them, and it’s obviously driving a lot of expense to the contact center. So how do we address that? I’m gonna continue this investigation a little bit, but at a surface level, I now know some of the main reasons they’re contacting [00:12:00] our agents and what the related sentiment is tied to that.

So I go into the text analytics tab here. This is now going to be inclusive of all the signals, all the interactions in the contact center, not just surveys, not just calls. This is the holistic view of all the engagements that our customers are having with our contact center. And at the high level, I can see the summary of topics, and website right now has the biggest negative impact score of 8.7.

So what that means is, again, when they are talking about the website, it is bringing down that top-level metric by almost nine points. Your NPS is suffering because of website issues. But again, website is pretty generic. That’s a level one, so that’s a parent topic. If you go down to a level two topic, and I click that little caret, you’re gonna start to see more granular detail around what it is about the website that’s causing a lot of challenges: logging in, logging out, password, registration, my inability [00:13:00] to be able to serve myself by logging into the website.

Now, there could be a correlation to making payments, right? I already know that there’s a lot of frustration coming into the contact center around making payments. Maybe there’s some correlation there. I’m gonna drill down into this. It’s gonna take me to the next subtab up here. Topic Deep dive gives me some high level metrics around what is the NPS related to when people are talking about this.

What’s the overall net sentiment? I can see volume coming in across the different channels where we’re engaging. This is the Segment Ranker, which is one of the things that I use quite often. I had responsibility for the entire dealer network in the US. I’d hear these issues coming in through TA, and I didn’t know where to look.

Segment Ranker allowed me to drill down by particular regions to see if it was prevalent in one region, even down to the dealership rooftop level. So I was able to go in and really start to understand who’s having the biggest trouble with this particular topic. So if I look at it here, I’m just gonna switch to supervisor, and I can start to see that [00:14:00] Aaron Key, poor Aaron, when he’s getting these calls coming in, he’s not performing well with his team in terms of resolving whatever those website issues are.

I realize it might not fall on Aaron, because he is a contact center rep, but he can start to build out a cross-functional tiger team, if you will, with the digital teams and start to build that case together. So now we’re both solving, we’re breaking down the silos and we’re working together to solve issues that are affecting both digital and the contact center.

And then one last thing before I turn it over to Lulu. Selecting that topic allows me to narrow my focus down to 700 different interactions versus the thousands upon thousands that I started with. These are gonna be the tagged comments that relate to that topic of website login issues.

I can filter down by particular sentiments if I want to as I’m building this case. It’s always great to incorporate some verbatims in there to provide that context and make your [00:15:00] stakeholders really understand the issues. And I can also sort by some of the things like most actionable if I wanted to, if there are some good actions or suggestions from my customers coming in that could relate to updating my password or that particular topic that I have.

So that was just a quick, high-level overview. Text Analytics 201 will be taking a deeper dive into some of this, so I encourage you to attend, but Lulu and I wanted to make sure you guys had a good foundation of what text analytics is in the Medallia platform. Lulu?

Lulu Tai: Thanks, Mikal.

Okay, we’re gonna switch gears a little bit. Mikal talked to you about what TA is and how TA works in Medallia, and I’m going to walk you through a little bit about TA program management. We’re gonna talk about the text analytics journey, where everybody could be at a different part of the journey, and that’s okay.

Mikal already referred to it multiple times earlier. A common misconception is that TA can be set up and it’s just gonna run perfectly. That is definitely not the [00:16:00] case. Your program will evolve as your business, your customer needs, and your technology change. So whether you’re just getting started or refining a mature TA program, the key is to keep iterating, learning, and optimizing to drive real impact.

As we go through the session, I’m going to walk you through the steps of a TA journey. I want you to think about where you or your organization is in this text analytics journey, and how we can move it to that next level. We typically see organizations somewhere in between any of these four key stages. So let’s walk through these stages and talk about what building a strong TA program could look like and the different steps of the journey.

Who in the room thinks they’re here?

Awesome. So at this stage, you are looking to set up your text analytics program. You’re figuring out what’s needed to get started, or you had a text analytics program and you are working to get it set up in Medallia. The goal is to make sure that we get the fundamentals right so that your program has a strong foundation and can scale effectively.

[00:17:00] Things we wanna be thinking about at this stage: How do I set up my TA program accurately? What data sources can I include? Definitely not just surveys. We’ve been hearing that all morning, and Mikal just mentioned it as well. We’ll talk a little bit more about data sources later as well. What’s the best way to structure our topics, and what kind of TA reporting do I need?

Now, most of the solutions we’re gonna be using in this kind of new beginner stage is what Medallia calls our best practice packages. Like the one that Mikal just walked through in our demo earlier. These are out of the box solutions that give us a starting point for topics and reporting.

So after your TA program is live, the next step is all about refining it and scaling it to provide meaningful insights across your organization. Who in the room thinks they’re at this stage? Great. So usually at this stage we’re looking at topic customization. You’re starting to refine your topics based on the feedback that you’re getting, but TA usage is often a little bit more ad hoc and reactive.

Adoption of TA is maybe a little bit [00:18:00] low. TA’s not fully integrated into your organization’s reporting workflows or insights workflows outside of the original implementation team or your core team. At this stage, we can also be thinking about, okay, how do we ensure that more teams in our organization are using TA and using TA effectively?

How do we move from reactive insights to proactive analysis? Now, if you’re leveling up and you’re in this optimized stage, you are shifting from passive reporting to proactive insight generation. Usually at this stage teams are beginning to use advanced topic customization and the advanced reporting enhancements.

There could be a high degree of admin suite, or what we call self-service, usage to manage your topics, although that’s not required. Your TA is proactively maintained rather than being updated only when issues arise, and your usage of TA is expanding across your organization, not just within siloed teams.

Who in the room is here? Nice. Great. So if you are in this stage and you’re thinking [00:19:00] about the next step, you wanna be thinking about: how do we customize TA further to fit our needs? Are we making full use of our TA capabilities, and are there additional data signals that we could be adding into Medallia?

Now, advanced. At this level, TA is fully optimized. It’s no longer just a reporting tool. It’s a core part of your business strategy. Who in the room thinks they’re here? Okay, we’ll get there. So at this stage, TA is used strategically, right? Insights from TA are actually informing your business decisions, anywhere from product development to staff training to service enhancements.

Ultimately, TA usage is embedded throughout your entire organization. If you’re already at this stage, then you might be thinking about what’s next in our roadmap for TA innovation. To that, we’ll say stay in touch with your servicing team. Stay up to date on our latest offerings, or partner with Medallia on our early adopter programs or beta programs so you can influence our product [00:20:00] development. Every organization’s at a different stage in their TA journey.

And that’s completely okay. The key that we want you to take away is that you wanna keep evolving from implementation to managing, to optimizing. Eventually we wanna be transforming how your company leverages unstructured data. As we go through the rest of the session, let’s think about how we can build a strong TA program that does that, that keeps evolving to meet your organization’s needs.

We’ve broken it down to five key pillars, and these five key pillars are relevant to you regardless of which stage you’re at. Now, they might look very different depending on the stage you’re in, but we’ll talk about those one by one. So most of us in the room probably started with surveys, or some of you might have included additional data sources during implementation.

While surveys are a great start, we’ve heard it multiple times today: consider expanding to other data sources so you get that more complete view, or omnichannel view, of your customers’ or employees’ experiences. Especially as our programs mature, [00:21:00] we really wanna be thinking about that. So Mikal already alluded to it earlier, but some of the data sources that we can think about including in Medallia are digital, speech, your contact center call and chat transcripts, even your mobile or online app reviews, right?

Those are really good. Case notes, and complaints that you get from your customers, as well. Language processing is also key, whether you’re using native language processing or translations. We wanna make sure that your program is designed to capture and analyze feedback in the languages that your organization and your audiences are using.

Topics: we’re gonna spend a lot of time talking about topics today because that’s what we can control, right? Mikal talked about how topics are human made, whereas themes are leveraging machine learning. So we’ll talk a little bit more about program management when it comes to topics. Once we have our data all in Medallia, we wanna make sure that we’re organizing it into actionable topics, meaning they need to be relevant, they need to be detailed, and they need to be operational so they’re aligning to our [00:22:00] business objectives.

Mikal already talked about our starter topics. We have more than 15,000 topics to get you started, ready to use right away. For those topics, we have coverage in more than 17 languages and over 15 industry verticals, such as automotive, hospitality, retail, and financial services. We also have cross-industry topic sets: profanity, emotion, contact center topic sets, natural disasters and events. We’re constantly adding to our topic library, rolling out new topic sets based on current events so we can enable our clients to listen, react, and respond very quickly in emerging situations.

Now as your program expands, you can build out a full topic hierarchy or you can install additional topic sets as you expand into new programs or add additional data sources. When it comes to building out a topic hierarchy, a lot of the time our clients ask us: what do I need to rely on Medallia to do versus what can I take on?

[00:23:00] And our response is always, there’s no right or wrong answer. We can actually meet you where you are, right? Medallia can offer you full-service solutions for building out your TA, but you also have the option to do what we call self-service and learn to build topics yourself and learn to maintain your topic hierarchy yourself.

You can also take a hybrid approach, right? Split that work between Medallia and yourself and focus on what your organization can take on. So the other thing that we wanna make sure that we’re doing is topic optimization and ongoing maintenance. When we have your topics ready, you wanna be thinking about the accuracy and coverage that Mikal talked about earlier.

What does accuracy mean? Are my topics capturing insights accurately? And am I getting good coverage? Are most of my verbatims being tagged by at least one topic? The next question we usually get asked is, then how often do I need to be checking my accuracy and my coverage? Usually we’ll say when your program is rapidly expanding, we recommend checking it quarterly, [00:24:00] but as your program stabilizes... I like that nod.

Yeah, quarterly is what we recommend, but as your program stabilizes, once or twice every year is probably good enough. Now, that being said, there are gonna be situations where you need to make ad hoc updates. Company events happen, product launches, industry shifts, emerging events: those are all reasons why you might wanna take another look at your TA health and make sure that everything’s okay there.
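A periodic health check on those two questions could be sketched as below. The definitions here are assumptions made for illustration: coverage as the share of verbatims tagged with at least one topic, and accuracy as the share of a hand-reviewed sample of tags that a person judged correct. The data and function names are hypothetical.

```python
def coverage(tagged_verbatims):
    """Percent of verbatims tagged with at least one topic."""
    if not tagged_verbatims:
        return 0.0
    tagged = sum(1 for topics in tagged_verbatims if topics)
    return 100 * tagged / len(tagged_verbatims)


def accuracy(reviewed_tags):
    """Percent of sampled (topic, is_correct) tags a reviewer marked correct."""
    if not reviewed_tags:
        return 0.0
    correct = sum(1 for _, is_correct in reviewed_tags if is_correct)
    return 100 * correct / len(reviewed_tags)


# Hypothetical data: the topics attached to each verbatim.
tagged_verbatims = [{"Website"}, set(), {"Payments", "Website"}, {"Staff"}, set()]
# Hypothetical manual review of a sample of individual tags.
reviewed_tags = [
    ("Website", True),
    ("Payments", True),
    ("Staff", False),
    ("Website", True),
    ("Payments", True),
]

print(coverage(tagged_verbatims))  # 60.0
print(accuracy(reviewed_tags))     # 80.0
```

Tracking both numbers each quarter, against the rough 80% baseline mentioned earlier in the session, shows whether tightening topic rules for accuracy is costing coverage, or the reverse.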

Having great text analytics topics is one thing. Making sure that they drive action is another. So we wanna make sure we have a strong TA reporting strategy where the right people see the right insights at the right time in a format that helps them make informed decisions very quickly. When thinking about reporting, we wanna make sure the dashboards are aligned to our organization’s goals and what we use to make decisions. The reports should provide clear, actionable insights rather than just data points and numbers.

We wanna make sure TA reporting is integrated into our organization’s reporting workflows. Too often [00:25:00] we see organizations really focus on the score: did it go up this month? Did it go down this month? But perhaps not paying enough attention to the why behind the scores, using our text analytics.

Mikal already talked about this a little bit earlier, but Medallia reporting lets you enable real-time notifications for emerging issues that require immediate attention, like those topic-based alerts, right? Many of our banking clients are leveraging them in their complaints programs so that they can be alerted every time a customer makes a complaint.

Other clients are relying on these real-time alerts to be notified about bugs, outages, staff’s unprofessional behavior, or even threats. As your TA program matures, you may also need to expand into more purpose-built or role-based reporting to fit the specific needs of other teams such as your executives or your front line.
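Conceptually, a topic-based alert is a lookup from the topics tagged on a record to the team that needs to hear about it. The sketch below is only an illustration of that idea; the routing table, team names, and record shape are hypothetical and are not Medallia configuration.

```python
# Hypothetical routing table: topic name -> team to notify.
ALERT_ROUTES = {
    "Complaint": "complaints-team",
    "Outage": "digital-ops",
    "Threat": "security",
}


def alerts_for(record):
    """Return (team, record_id, topic) for every alert-worthy topic on a record."""
    return [
        (ALERT_ROUTES[topic], record["id"], topic)
        for topic in sorted(record["topics"])
        if topic in ALERT_ROUTES
    ]


record = {"id": "r-102", "topics": {"Outage", "Website", "Complaint"}}
for team, record_id, topic in alerts_for(record):
    print(f"notify {team}: record {record_id} tagged {topic}")
```

Topics without a route, such as the website tag here, still show up in reporting but do not page anyone.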

We know that executives don’t wanna see all the things that we see when we’re on an insights team, and our frontline doesn’t have time to be scrolling through dashboards day in and day out. They gotta get to their jobs. And so if you wanna get deeper into advanced reporting [00:26:00] strategies, be sure to check out Text Analytics 201 tomorrow afternoon.

Laura and Rachel are gonna walk you through specific use cases on how you can build out role-based dashboards. Work’s not done. We’ve built a strong TA program. What else do we need to do? We need to make sure that ongoing adoption is there. We recommend tracking TA engagement over time and finding out where users are needing support.

Medallia offers Healthwatch dashboards to help you track engagement. We recently had a large retail client where we launched new dashboards to their front line and we collected ongoing feedback to ensure that their dashboards are actually working for them and providing value. We also recently helped a large bank leverage Health Watch to identify, in their large reporting library, what reports we could maybe sunset or think about revamping because we’re not getting a lot of usage there.

We also recommend that you share best practices internally, either through Medallia or self-paced learning. Encourage your different teams to incorporate text analytics into [00:27:00] their workflows.

You can engage your Medallia team to organize what we call insights workflow workshops, where we help you or your stakeholders learn how to fish for insights through your TA reporting. That way you’re not always being bothered by your stakeholders to feed those insights to them; you’re enabling them to get those insights in real time themselves.

So we already talked about how TA isn’t a set-it-and-forget-it program. A well-governed TA program makes sure that your topics are relevant. We talked about how reporting needs to be impactful. We wanna make sure our teams are continuing to find value in the insights. In addition to tracking the health of your TA, a lot of the time we get questions from the core TA champion at the organization: I’m getting a lot of feedback or requests for topics.

How should I handle those? This is where we recommend that you work with your Medallia team to define a process that works for your organization. So imagine what a topic approval process could look like, and then who carries out the execution to build those topics and build that reporting.

Last but not least, let’s stay [00:28:00] connected and continue evolving, right? Keep an eye on Medallia’s latest offerings. New features are constantly being released. We heard a lot about the Gen AI products earlier this morning. But for text analytics we also have features like phrase-level filtering, which allows you to filter text based on the specific phrases within a response.

And it really helps you make insights more precise and more actionable. And so for a deeper dive into phrase level filtering, I’m gonna make another plug. Again, make sure you check out the text analytics 2 0 1 masterclass tomorrow. ’cause they’re definitely gonna be covering that. All right, Mikal, we went through a lot today.

Mikal Reagan: It was a lot. Hopefully... go ahead to the next slide. Yeah, it’s got a build, I believe.

Lulu Tai: Oh, it’s got a build. Fancy.

Mikal Reagan: Hopefully you’re gonna walk away with a foundational understanding of text analytics: how it can provide insights, and how you can shape those stories to build out those cases with your stakeholders, to get that action to take place to reduce some of those friction or pain points for your customers or your employees.

It allows you to prioritize those key [00:29:00] areas of investment by understanding: does this have a significant impact, or is this just noise? And then Lulu gave us quite the plan on how to evolve your text analytics program. So just remember, like anything, it needs a little love, a little tender care. It needs to continue to be nurtured so that you can get the most out of it.

And there’s also “Raise Your Reporting Game,” where you can fully understand the entirety of the reporting platform. In addition to text analytics, understanding the metrics, the other analytics that live within the Medallia platform, we do have other resources at the hub. Go in, take a test drive if you need to.

Of course there’s some self-service enablement in our navigator to learn more about this. But more importantly, I think if you’re ready to make some moves, start to engage conversations with your professional services team, they’ll reach out to experts like Lulu and the kind of expert like me to come help you guys evolve your program.

And of course, who’s familiar with MUG? Here we got some MUG lovers. Whoa. Nice. Oh, there we go. There it [00:30:00] is. We do have a TA MUG. The next one’s April 17th at 1:00 PM. This entire deck will be available to you. We’ll leave up the QR code if you guys want to go ahead and register for it while you’re here.

So hopefully that was helpful for you guys. But now we want to open it up for any questions or I’ll even. Pardon me.

Lulu Tai: Compliments.

Mikal Reagan: Compliments. Or if anybody has a great story about their own use of text analytics within their employee experience or customer experience programs the doors will remain locked for the next 10 minutes, so you can’t leave.

Any questions? Thoughts? Yes, ma’am.

Q+A: Thanks to both of you for the interesting session. We have implemented text analytics in our instance. We are a B2B company. But when you mentioned the optimization of the topics, right? If I optimize, like initially we had 50 topics, now we have 30 topics, won’t the tagging of the previous topics get lost when you start optimizing the topics, going [00:31:00] forward? When I want to share a story of a customer for the past three years, if I change the topics, how is it going to impact my instance?

Lulu Tai: I got you. So you’re asking, I already have a base of topics to start.

If I add more, how is that gonna affect my tagging performance?

Q+A: if I reduce the topics? If

Lulu Tai: you reduce the topics? Okay. That’s a great question. Generally you might see it go down, but it really depends on the type of topics that you have there. If you’re reducing topics that aren’t really beneficial for you or aren’t performing really well, not really bringing any volume, then that’s what we would call actually a good cleanup.

In that case, I would be more focused on what’s the value that your topics are bringing, and if they are bringing value, keep them. If they’re not bringing value, then for sure, spring cleaning, let’s throw them out.

Mikal Reagan: Is there any historical record of that analysis after she eliminates or reduces the topics?

Lulu Tai: You have the option to choose that. So when you are removing topics, you can say, do I wanna erase it from [00:32:00] all of history? I never wanna see these topics ever again. Or do I wanna retain the history, but I don’t wanna see those topics going forward?

Q+A: Yeah, I want to retain the history. But for example, if a topic is resource, right? Resource hiring, resource talent, everything will be there under resource. Yeah. So if I’m taking off three topics out of the five, how do I pull out the history?

Won’t that previous data just get lost?

Lulu Tai: No. So if you choose to retain that, you’re just deleting the topics for going forward. The historical comments that have the historical data, those will stay unless you choose to remove them. Okay.

Q+A (2): Okay. Thank you. You know how you went with the first slide, how you parse the sentence and something is positive and negative.

Sometimes we ask a question like, how can we help? And so the comment starts with, you can help with A, B, C, and that “help” actually tags it to the negative sentiment. [00:33:00] But now I’m like, I lost the trust from the stakeholders. You’re asking this question, it’s negative. And so we have to rebuild the sentiment.

So that’s one thing that we felt, and the question is, can these sentiments be different by the question? Which means “help” should be a negative sentiment for an NPS comment, but when we are asking for help, it should be like a gray or.

Mikal Reagan: I’m gonna let you take that one, but I will mention that we do have sentiment override in the platform.

We didn’t talk about that. So in that instance where help was deemed negative, but in reality it should have been a positive or at least a neutral experience, you can go in as a user with the right admin rights, obviously, and do a sentiment override on that. Now, the challenge with that, and keep me honest here, Lulu, is I don’t think that trains the model.

So what we could do is export that, but I’m guessing Lulu’s gonna say that we have [00:34:00] to go in and look at those comments and manually adjust the accuracy of this.

Lulu Tai: Yeah, so you can do sentiment override, but even before then, we actually have a setting. This I would recommend you reach out to your Medallia team for, because I don’t think this is something that you can do yourself, but you do have the option to tell the system, Hey, these “How can I improve?” questions are naturally going to be negatively directed, right? And so you can put those in as a setting so that the system takes that into consideration, knowing that these are the questions that are generally gonna be more negatively geared.

Q+A (2): So can that be done by questions like,

Lulu Tai: yes.

Q+A (2): Okay.

Lulu Tai: Yes.

Q+A (2): By question,

Lulu Tai: Yes, we can do it for positive and negative.

Q+A (3): We are using text analytics in both our relationship feedback program and transactional as well, on the web experience. And we were using up until now the same code frame for both. The line of business and the strategy changed a little bit.

So we have improved the code frame for relationship [00:35:00] feedback. And when we’re done testing it, I would like to do some improvements so that we can reuse it for digital, but adding all of the nuances of the web experience, like actions and behaviors: downloading something, uploading something, logging in, clicking, all of those things.

Do you have any best practice on how to tackle it? You gotta follow,

Mikal Reagan: so to rephrase. You’ve got a baseline of TA for the relationship. You want to duplicate that, but then enhance it with all the nuances of digital behaviors experience there.

Q+A (3): Correct. We don’t want something completely different because the idea is that you can complement one with the other.

Mikal Reagan: Is this tag pools? Is this where?

Lulu Tai: I think so. Yeah. So I think what you’re asking is, how can I merge it together? How can I leverage what I already have but build on top of it? This is getting a little bit tactical, but we usually call this topic set design, right? So if I already have an existing topic set, or you call it a code frame, definitely you wanna leverage that on the new feedback that you’re getting in. And then we do what we call an untagged analysis. So review what are the comments that are [00:36:00] not being tagged by your existing topic set and look through those comments and say, Hey, what are these new topics that I need to consider that I can add? Because people are talking about it.

And so this untagged analysis is very popular with a lot of our Medallia clients. That’s certainly something that I would encourage you to reach out to your Medallia servicing team to help you set up. You can do it by yourself. You can get Medallia to do it, but that’s going to help inform you on what new topics you actually need.

I’m not flying in blind. You can also reach out to Medallia about, Hey, digital, right? You talked about digital experience. We definitely have a digital experience starter topic set to get you started. You can also leverage your themes, run themes on that new feedback and let themes tell you what new topics you need to build.
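The review of comments that the existing topic set fails to tag, as described in this answer, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how the Medallia platform does it: the comment records, the `topics` field, the stopword list, and both function names are hypothetical, and simple word counts stand in for whatever a real analysis would use.

```python
import re
from collections import Counter

# Hypothetical stopword list; a real analysis would use a fuller one.
STOPWORDS = {"the", "a", "to", "i", "and", "my", "was", "it", "on", "is", "not", "would"}

def untagged_comments(comments):
    """Return the comments that no existing topic matched."""
    return [c for c in comments if not c["topics"]]

def candidate_terms(comments, top_n=3):
    """Count how many comments mention each non-stopword term."""
    counts = Counter()
    for c in comments:
        words = set(re.findall(r"[a-z]+", c["text"].lower())) - STOPWORDS
        counts.update(words)
    return counts.most_common(top_n)

# Hypothetical feedback: one comment tagged by the relationship topic set,
# four digital comments that the existing topics do not cover.
comments = [
    {"text": "The account manager was helpful", "topics": ["Account Management"]},
    {"text": "Login kept failing on the portal", "topics": []},
    {"text": "Could not download my invoice", "topics": []},
    {"text": "Download button is broken", "topics": []},
    {"text": "Login page would not load", "topics": []},
]

untagged = untagged_comments(comments)
print(len(untagged), "untagged comments")
print(candidate_terms(untagged, top_n=2))
```

Terms that recur across the untagged comments (here "login" and "download") are candidates for new digital topics to add on top of the existing topic set.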

Mikal Reagan: Cool.

Lulu Tai: Thank you.

Mikal Reagan: The short answer is yes, you can.

Lulu Tai: Thank you.

Mikal Reagan: Yeah, so we have an automotive client that’s got a pretty omnichannel, holistic view, from digital, contact center, obviously sales and service, roadside, and they had individual topic sets for each of those touch points. They were [00:37:00] missing out on a lot, because I might be calling into the contact center about a digital experience and we weren’t tagging those.

So what we did was merged all those tag pools together and then created one holistic topic set that’s now able to apply irrespective of the channel.

Q+A (4): Thanks so much for the session. We are with Adobe and we are definitely in the maturity stage, intermediate, where we have a topic model set up, but we’ve been doing a lot of assessments and we’re just wondering, are there any capabilities to optimize your text analytics in the platform that could help us?

And also, what challenges have you seen with TA, and where are you looking to evolve what you can do with TA in the product in the future?

Lulu Tai: Great question. Do you wanna take any of that?

Mikal Reagan: I’ll take the second part, but have you been into, have you been into the self-service? It’s like

Q+A (4): tuning.

Yeah. Like you can go in and tune like the sentiment. Yeah. There’s work groups.

Mikal Reagan: Yeah. And you’ve seen all that and how you can leverage that.

Q+A (4): Yes. And a little bit, we opened it [00:38:00] up to some change agents to do some tuning as well. But there’s just a lot that we need to tune. So

Mikal Reagan: let me jump in with my perspective on the second part, the challenges.

I think what Oody shared a little bit was what we’re seeing with some of the challenges. Applying negative sentiment over what seems to be either a neutral or a positive experience. And that can obviously impact your impact score a little bit, right? And so having to continue to refine, and this is why we really emphasize the evolution of your programs and not just turning it on and hoping for the best and expecting everything to work out.

That tends to be the biggest thing, and that’s why I start to recommend this sentiment override. I think the ideal nature of our evolution will be when dedicated people are going in (we don’t want just anybody going in), and when they’re updating, that’s gonna reflect your own specific model. It’s gonna train the model of TA within your instance.

I added that to the roadmap. I don’t think you know that. I just did it. You just did that? Yeah. It’s gonna be on the roadmap. Perfect. Lulu, do you want to address in the last minute and a half [00:39:00] or so?

Lulu Tai: Yeah, I think one of the common challenges that we hear from our more mature or experienced clients is that TA can be a little bit manual, right?

Mikal made a joke about how we think that we’ll never have to read comments again. Definitely we’ll still have to read comments, but I think there’s improvements that can be made to help us read comments faster, update our topics faster, update sentiment faster, and that’s where a lot of our Gen AI improvements are gonna help us.

The future of the product roadmap is looking at themes. We saw themes this morning in the keynote. Themes is gonna be much more actionable. It’s gonna provide more insights so that we don’t have to rely on our topics as much. Topics are gonna be there, their value is gonna be there, but themes is gonna help us act faster.

It hasn’t been announced yet, but we are working on model-based topics. So what does that look like in the future? How can we leverage machine learning and AI to help us build topics faster? That’s later on in the product roadmap, so that’s why it’s not been discussed. But that is something that Medallia is looking at to help us fine tune and work on our TA faster and more efficiently.

Q+A (5): [00:40:00] I’ve been using Medallia for a long time. I use topics and themes, and sometimes we create surveys based on what we receive as really bad feedback in themes and also in topics. And we have a lot of questions, especially from executives. They always ask me questions like, we have these themes and topics, and with the impact scores that it’s hitting your account.

They always ask me a question: if I fix this one over here, would the impact score promote my NPS score? That’s one. And the second was, is there a relationship between topics and themes and forecasting NPS? Oh, no. Because they’re always asking about forecasting NPS.

Mikal Reagan: The answer to the first question, if I heard that correctly (I’m taking all the softballs, I’m sorry, Lulu), is yes, ideally, right?

That’s the nature of impact score. If you address whatever that particular issue, if I heard you correctly, yeah. Whatever that particular issue is, you should see your net promoter score go up. It’s not gonna be a direct correlation because this is historical, [00:41:00] right? It’s looking at all the records that have come in and what the impact to your NPS would’ve been had you eliminated that.
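The idea of an impact score as "what your NPS would have been had you eliminated that" can be illustrated with a small Python sketch. It assumes the simplest possible reading, the NPS of all responses compared with the NPS of the responses that do not mention the topic; the platform’s actual calculation is not given in this session and may differ. The `Response` class, the function names, and the sample data are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Response:
    score: int          # 0-10 likelihood-to-recommend rating
    topics: frozenset   # topics tagged on the comment (hypothetical names)

def nps(responses):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not responses:
        raise ValueError("need at least one response")
    promoters = sum(1 for r in responses if r.score >= 9)
    detractors = sum(1 for r in responses if r.score <= 6)
    return 100.0 * (promoters - detractors) / len(responses)

def impact_score(responses, topic):
    """Change in historical NPS if responses mentioning `topic` are left out."""
    without = [r for r in responses if topic not in r.topics]
    return nps(without) - nps(responses)

# Hypothetical historical responses.
none = frozenset()
responses = [
    Response(10, none), Response(9, none), Response(9, none), Response(10, none),
    Response(8, none), Response(7, none),
    Response(3, frozenset({"wait time"})), Response(2, frozenset({"wait time"})),
    Response(6, frozenset({"billing"})), Response(9, frozenset({"billing"})),
]

print(nps(responses))
print(impact_score(responses, "wait time"))
print(impact_score(responses, "billing"))
```

Because the calculation only looks back at responses already received, the result is a rough guide to the uptick you might see, not a promise of the exact number.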

Moving forward, you can expect some sort of uptick roughly around what that impact score is, but don’t tell your leaders it’s gonna be exactly a 9.8-point increase if your impact score is 9.8, if that makes sense. Makes sense. And then the second question,

Lulu Tai: forecasting NPS.

Mikal Reagan: Forecasting NPS,

Lulu Tai: don’t we all wish that we can do that?

Yeah,

Mikal Reagan: Oh, look at that. We’re outta time. I think we want to get there, right? I think the nature of CX as it continues to evolve is to be more predictive, right? To forecast what it could be. I think there’s a lot of contextual elements within that. Now there was an organization that did create kind of a model on this, but it wasn’t so much tied to text analytics as tied to the score, and they could, with about 95% confidence, predict what that looks like.

Yeah. Is it there? Yeah. Are we gonna have the [00:42:00] models anytime soon? Probably not.

Q+A (5): I create the standard deviations for forecasting. Yeah. Until it was like, 95% you’re gonna be in that area, 99% may be there. Sometimes it works. Sometimes the executives don’t agree upon the standard deviation.

But if there is a Medallia forecasting based on topics and themes, that would be great. Sorry.
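The attendee’s standard-deviation approach can be sketched roughly as follows. This is a minimal illustration, assuming past period-level NPS values are treated as roughly normal and independent, which real NPS series often are not; the function name and the monthly figures are hypothetical, and this is not a Medallia feature.

```python
from statistics import mean, stdev

def nps_band(history, z):
    """Return (low, high): mean of past NPS values +/- z sample standard deviations."""
    if len(history) < 2:
        raise ValueError("need at least two past values")
    center = mean(history)
    spread = z * stdev(history)
    return center - spread, center + spread

# Hypothetical monthly NPS values.
monthly_nps = [40, 42, 38, 44, 41, 41]

low95, high95 = nps_band(monthly_nps, 1.96)    # about 95% under a normal assumption
low99, high99 = nps_band(monthly_nps, 2.576)   # about 99% under a normal assumption
print(f"95% band: {low95:.2f} to {high95:.2f}")
print(f"99% band: {low99:.2f} to {high99:.2f}")
```

A band like this describes where the score has tended to sit. It says nothing about which topics or themes would move it, which is the part the attendee is asking Medallia to add.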

Mikal Reagan: No, that’s good. That’s good. I appreciate the discussion. I think that is our time though now.

Lulu Tai: That is

Mikal Reagan: really appreciate the engagement. Appreciate the discussion. Thank you.